Chapter 4

How to craft the perfect maze

Now that you’ve created your Maze project, it’s time to check over what you’ve built so far and start thinking about the experience for your testers.

While we’ve created a number of features within Maze that enhance the testing experience (covered in this chapter), there are some key testing guidelines you need to keep in mind.

To get the best possible results from your mazes, you need to give clear instructions for tasks, construct questions that don’t risk biasing responses, and build rapport with your testers. Let's look at each of these in detail to learn how to create an effective maze.

General guidelines for testing with users

You want to make sure you’re providing testers with the best experience possible, so before you send your test out, cast your eyes over this checklist.

1. Keep the number of blocks under eight

Remote testing sessions are very different from face-to-face interviews: testers aren't dedicating a hundred percent of their focus to your product. That's great news because in real life your users won't either.

Testers give away some of their free time in participating, so their attention will drop significantly the longer your maze lasts. Based on our internal data at Maze, we’ve found that testers normally drop off after completing eight blocks. And in remote unmoderated testing, the last thing you want is testers abandoning the test for the “wrong” reasons, such as the length of your test.

So it’s a good rule of thumb to keep your number of blocks under eight and the average time to complete under five minutes.

Tip 💡

Share your draft maze with a coworker to track the time it takes them to complete it, and if it's over five minutes, you might need to simplify your maze.

2. Keep your descriptions under 140 characters

In Maze, you can add descriptions to each block type, but they’re particularly useful for Mission blocks.

A general rule of thumb with descriptions is not to go into too much detail. If you find yourself writing more than 140 characters, you're either:

- Providing too many details (e.g., "Go to page X and click on button Y"), which leads to biased results as you're telling them how to complete the task

- Creating a mission that should be broken down into two separate ones. If there's more than one task to complete in a mission, consider breaking it up into two or more missions. Focus each mission on one key task.

To keep testers engaged with your test, give them clear directions while being concise and avoiding leading descriptions. More on the last guideline next.
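If you draft your block copy outside of Maze, a quick script can flag descriptions that run past the 140-character guideline. This is only a minimal sketch: the `draft` dictionary, its block names, and the function name are hypothetical and are not part of any Maze feature or API.

```python
MAX_DESCRIPTION_CHARS = 140  # guideline from this chapter


def overlong_descriptions(descriptions, limit=MAX_DESCRIPTION_CHARS):
    """Return (block name, length) pairs for descriptions longer than the limit."""
    return [
        (name, len(text))
        for name, text in descriptions.items()
        if len(text) > limit
    ]


# Hypothetical draft copy, made up for illustration.
draft = {
    "Mission 1": "Imagine you're buying airplane tickets to visit your family in Australia.",
    "Mission 2": "x" * 150,  # stand-in for a description that is too long
}
print(overlong_descriptions(draft))
```

A flagged description is a prompt to reread it, not an automatic failure: check whether it gives away the steps or bundles two tasks into one mission.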

3. Avoid leading words in your questions and tasks

Be sure to check your questions for this common mistake. As humans, we sometimes frame questions in ways that reflect our own opinions, which makes it harder to collect impartial, unbiased results.

For example, don't frame your questions like this: "How easy do you find our product to use?" This wording suggests that your product experience is easy, which might not be the case.

Instead, ask: "How was your experience using our product?" or "Describe your experience using our product."

4. Add context and reference points

Always add context when you can. Whether you’re testing copy on a landing page or referencing a feature within the app, put yourself in the position of the tester and make it as easy as possible for them to retrieve the information or context they need to complete the test.

This doesn't mean giving them leading instructions like 'click on the X button', but providing as much context as possible in your prototype or mockups so the experience feels close to using a real-life product. Establish scenarios when you can by writing up the use case around a mission or task. For instance:

"Imagine you're trying to buy airplane tickets to go visit your family in Australia."

These types of scenarios will help the user get into the right mindset and understand your prototype or questions better.

You can use Context Screens to rethink the framing of questions. For example, rather than asking questions like this: "What do you think about our like feature?", you can reframe it to recall their last use: "The last time you used our like feature, how was your experience?".

5. Avoid too many closed-ended questions

Closed-ended questions elicit a short, fixed answer such as "yes" or "no". Testers answer only what is being asked, so the replies carry little qualitative value. If something isn’t working, you don’t only need to confirm it; you also want to know why.

When asking closed-ended questions, pair them with open-ended questions to collect more complete and actionable answers and give your participants a chance to elaborate on their answers.

For example, don't frame your questions like this: "Do you like our new feature?"

Instead, ask: "What do you think about our new feature?" or add a follow-up "Why?" question to learn more.

6. Customize the experience for users

This can be anything from bringing your brand voice to tests and creating contextual flows in sequences of questions, to the testers you choose (more on the latter, later).

User tests and surveys are an opportunity for companies to communicate with their customers—they’re an extension of your brand. Ensure any copy or branding is in line with your official brand guidelines and consistent throughout. You can add a customized Welcome Screen to give your maze a more personalized touch.

Additionally, make sure your sequence of questions and tasks makes sense: check that they tell a story, relate to one another in context, and are relevant to the tester.

Tip 💡

Use Conditions to create custom, contextual flows based on user responses throughout the maze.

Tips for creating missions

1. Keep missions simple and straightforward

If you’re running a usability, prototype or wireframe test where you’ll be adding missions to tests, chances are that your testers are very new to the concept of prototyping and user testing. To help them, we've added the following warnings at the beginning of a maze:

- You will soon start to complete a series of missions and questions. There's no right or wrong answer; just do what comes naturally!

- What follows is a succession of interactive screens, these are designs and the final product may differ.

- When asked to complete an action, you only have to click or tap. If something doesn't respond, don't worry - this just means it's not clickable.

Even with these warnings in place, it's common to see patterns of user frustration during the first mission (misclicks, longer time spent on pages, bounces, etc).

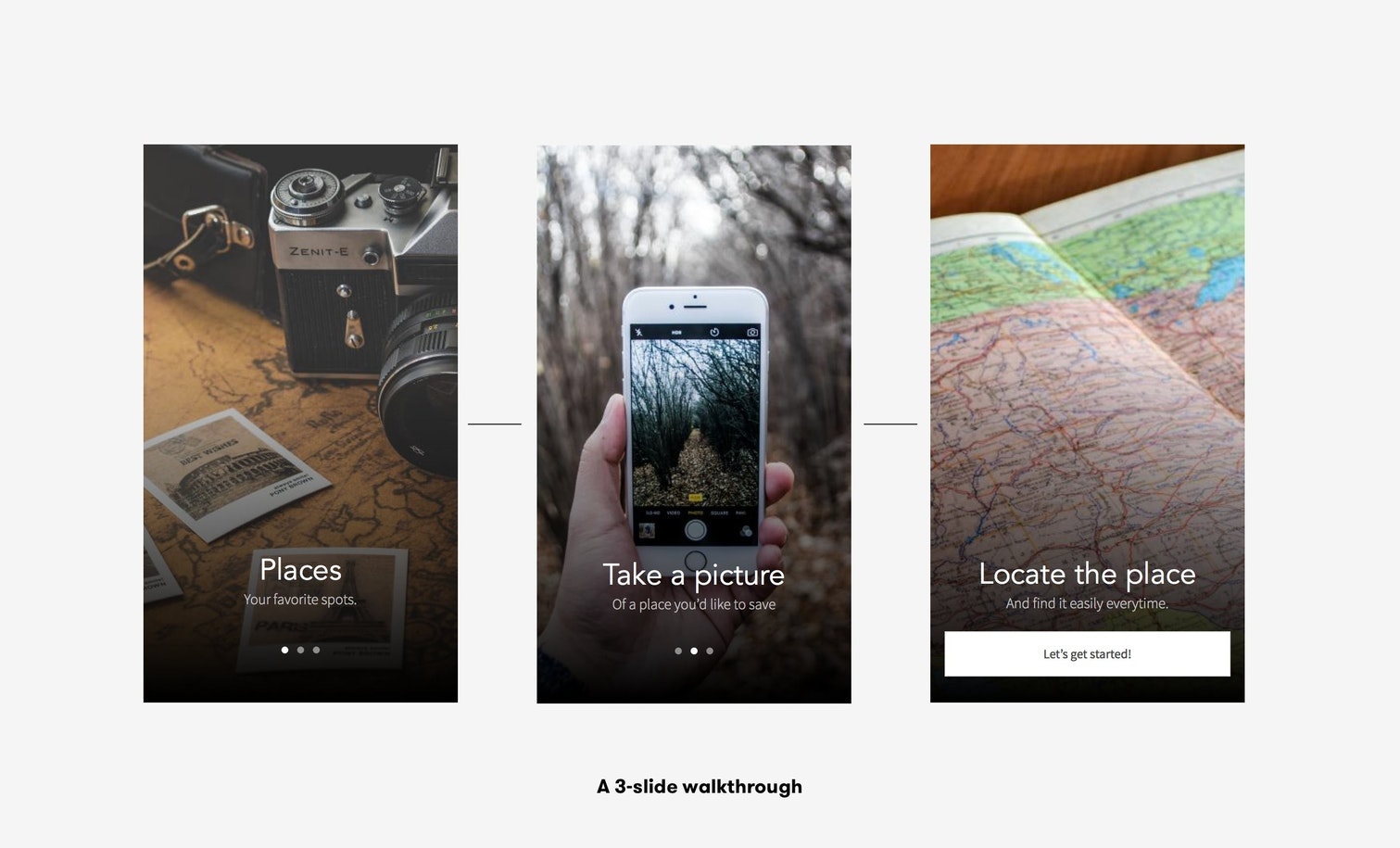

A great way to introduce the concept of testing (and Maze) to your testers is to start with a simple and straightforward mission: a three-step walkthrough usually works best.

2. Follow the user flow

Your product has been crafted to be used a certain way, so a great practice is to follow the product's natural user flow and avoid jumping between unrelated parts of your product from one mission to the next.

To achieve this effect, try as much as possible to start a new mission with the previous mission's end screen to help avoid confusion.

Tip 💡

Change the prototype starting screen of your maze to reflect the user flow you want to test.

3. Ensure your prototype works well

This is an obvious yet very important one. Make sure your prototype doesn't have:

- Screens with no way to go back or access other pages of the prototype (i.e., no hotspots)

- Infinite loops of "Go back" events. Two consecutive screens with "Go back" events will end up looping from one to the other.

If a tester gets stuck, they are 20% more likely to bounce rather than give up on the mission. So before starting to create your maze and missions, make sure your prototype works as intended. You can always refresh your prototype if you’ve made changes to it after importing.
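Both problems can be checked by hand, but if you keep a list of which screens link where, a few lines of code can do the same walk-through. The sketch below assumes a hand-written map of screens to hotspot targets; the `BACK` marker, the screen names, and the function names are all hypothetical, and this is not how Maze or any prototyping tool represents a prototype.

```python
BACK = "<back>"  # hypothetical marker for a "Go back" hotspot


def dead_end_screens(prototype):
    """Screens with no hotspots at all, so testers cannot leave them."""
    return sorted(screen for screen, targets in prototype.items() if not targets)


def go_back_loops(prototype):
    """Pairs (a, b) where a links to b and both screens have a "Go back" hotspot."""
    loops = []
    for screen, targets in prototype.items():
        if BACK not in targets:
            continue
        for target in targets:
            if target != BACK and BACK in prototype.get(target, []):
                loops.append((screen, target))
    return sorted(loops)


# Hypothetical prototype: each screen maps to the targets of its hotspots.
prototype = {
    "Home": ["Search"],
    "Search": ["Results", BACK],
    "Results": ["Checkout", BACK],
    "Checkout": [],
}
print(dead_end_screens(prototype))  # ['Checkout']
print(go_back_loops(prototype))     # [('Search', 'Results')]
```

Here "Checkout" is a dead end, and "Search" and "Results" can bounce a tester back and forth because each one's "Go back" returns to the other.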

4. Avoid product terminology

Unless the variable you're testing for is the ability for testers to understand your product's internal language (also known as content testing), use broad, general terms to describe actions. This is especially important when testing with new users who haven't used your product much or at all.

Example:

✅ Do: "Post a new status update!"

❌ Don't: "Send a Wuphf!"

5. Define mission success KPIs

A great way to make the most out of the Maze data is to create a sheet beforehand where you can define what you expect for each mission, KPI-wise.

Examples of mission KPIs:

- Direct success: > 75%

- Average time: < 12s

- Misclick rate: < 20%

After your testing session is complete, compare your expectations to the collected KPIs and see where your design can be improved.
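The comparison itself is simple enough to do in a spreadsheet, but it can also be sketched in code. The example below uses the three example targets listed above; the key names, the `mission_results` values, and the function name are hypothetical, and the results are typed in by hand rather than pulled from Maze.

```python
import operator

# Targets from the examples above: (comparison, threshold).
TARGETS = {
    "direct_success": (operator.gt, 0.75),  # > 75%
    "average_time_s": (operator.lt, 12.0),  # < 12s
    "misclick_rate": (operator.lt, 0.20),   # < 20%
}


def missed_targets(results, targets=TARGETS):
    """Return the KPI names whose collected value does not meet its target."""
    return [
        name
        for name, (meets, threshold) in targets.items()
        if not meets(results[name], threshold)
    ]


# Hypothetical results for one mission, entered by hand for illustration.
mission_results = {
    "direct_success": 0.82,
    "average_time_s": 15.4,
    "misclick_rate": 0.12,
}
print(missed_targets(mission_results))  # ['average_time_s']
```

In this made-up case, success and misclick rates meet expectations, while the average time points to a screen or step worth a closer look.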