Chapter 3

Creating an effective concept testing survey

In this chapter, we look at the key concept testing methods and how to create an effective concept testing survey.

Concept tests come in many forms, including focus groups and interviews, but by far the easiest and quickest type to implement is a survey.

Crafting an entire concept testing survey from the ground up may sound like a daunting task at first. Where do you start, and what should you include in the test? Luckily, we've compiled some of the key elements of a concept test below to help you answer those questions.

You will likely end up changing your concept test over time to better suit your needs or to help respondents understand your questions better.

“Start with a few minor iterations until you are sure the testers understand your test questions correctly,” says Shelly Shmurack, Product Manager for Walmart Global Tech. She adds that in order to run successful concept tests, your team should "iterate, learn, and adapt quickly."

With a well-thought-out concept test on your side, you can go on to reap the benefits of the data you gather, whether that means scrapping the product concept altogether or moving forward with confidence.

Let’s start by understanding the four main survey concept testing methods.

Survey concept testing methods

Choosing the appropriate concept testing method is key, and which one works best for you will depend on your resources and the insights you need.

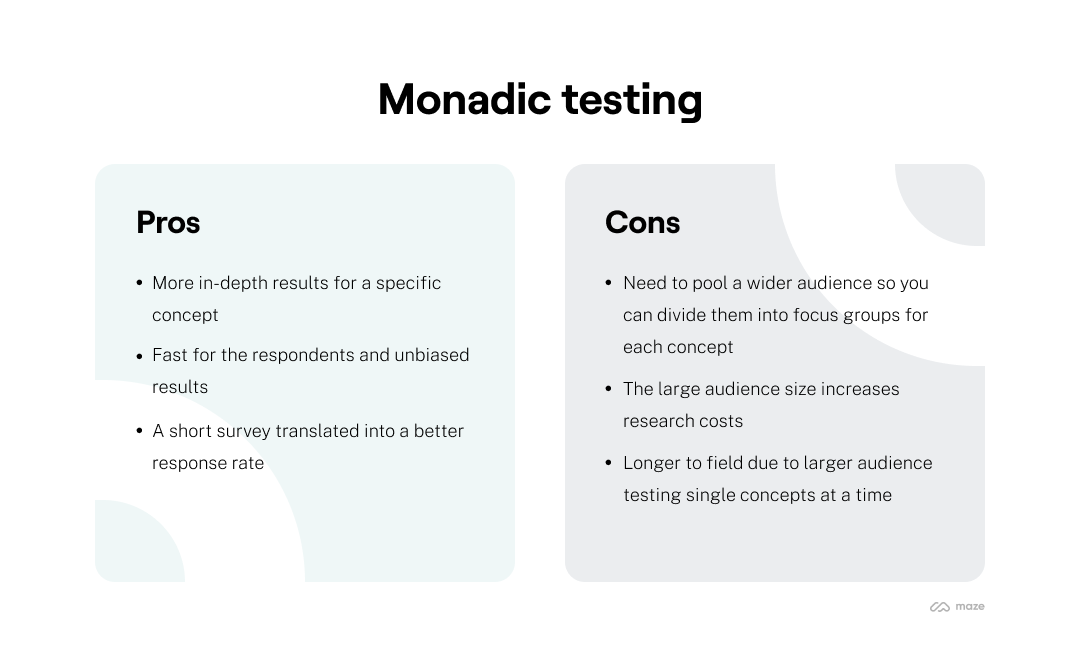

1. Monadic testing

In a monadic test, you divide test participants into groups and show each group a single concept, which they then evaluate in an in-depth survey. So, for instance, if you’re testing various feature designs, give each group only one design variant to review and share insights on.

Since each group reviews only one variant, responses tend to be unbiased, detailed, and highly targeted. The response rate also tends to be high since these concept testing surveys are short.

But here’s the thing: the more variants you have, the larger the audience you need for the test, since each group evaluates only one concept. This counts as a downside for those with limited resources, as a larger participant pool increases research costs.
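To make the audience-size trade-off concrete, here is a minimal Python sketch of a monadic split. The function names and the figure of 100 respondents per group are illustrative assumptions, not recommendations from this guide.

```python
import random
from collections import Counter


def required_sample_size(n_concepts, per_group):
    """Total respondents needed when each group sees exactly one concept."""
    return n_concepts * per_group


def assign_monadic(participants, concepts, seed=None):
    """Randomly split participants into near-equal groups, one concept per group."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    # Deal shuffled participants round-robin so group sizes differ by at most one.
    return {p: concepts[i % len(concepts)] for i, p in enumerate(shuffled)}


if __name__ == "__main__":
    concepts = ["Design A", "Design B", "Design C"]  # hypothetical variants
    participants = [f"respondent_{i}" for i in range(1, 11)]
    assignment = assign_monadic(participants, concepts, seed=42)
    print(Counter(assignment.values()))  # how many respondents see each design
    print(required_sample_size(n_concepts=3, per_group=100))
```

Under these assumptions, three variants at 100 respondents each call for 300 respondents in total, and every extra variant adds another full group.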

Monadic testing

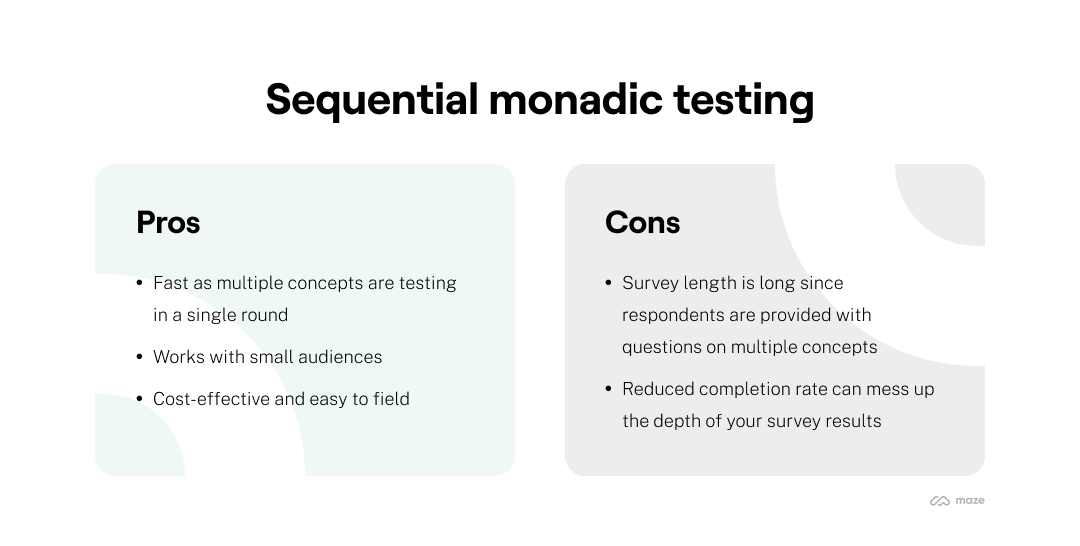

2. Sequential monadic testing

As with monadic testing, sequential monadic testing requires you to divide test participants into groups. The chief difference, however, is that every group is shown all the concepts, one after another.

This way, the audience size doesn’t have to be large. And, to reduce the odds of bias, the order of variants shown to participants is randomized.

Sequential monadic testing is great for teams with budget constraints or a smaller pool of test participants.

But be prepared: because each respondent evaluates every concept, the survey gets lengthy, and lengthy surveys tend to have lower completion rates.
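The randomized ordering described above could be sketched as follows. This is a minimal Python example under simple assumptions: the function name `presentation_orders` is hypothetical, and each respondent gets an independent shuffle rather than a formally balanced design.

```python
import random


def presentation_orders(respondents, concepts, seed=None):
    """Give every respondent all concepts, each in an independently shuffled order."""
    rng = random.Random(seed)
    orders = {}
    for respondent in respondents:
        order = list(concepts)  # copy so the original list is left untouched
        rng.shuffle(order)
        orders[respondent] = order
    return orders


if __name__ == "__main__":
    orders = presentation_orders(
        ["respondent_1", "respondent_2", "respondent_3"],
        ["Concept A", "Concept B", "Concept C"],
        seed=7,
    )
    for respondent, order in orders.items():
        print(respondent, order)
```

Shuffling per respondent spreads any effect of seeing a concept first or last across all concepts instead of concentrating it on one.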

Sequential monadic testing

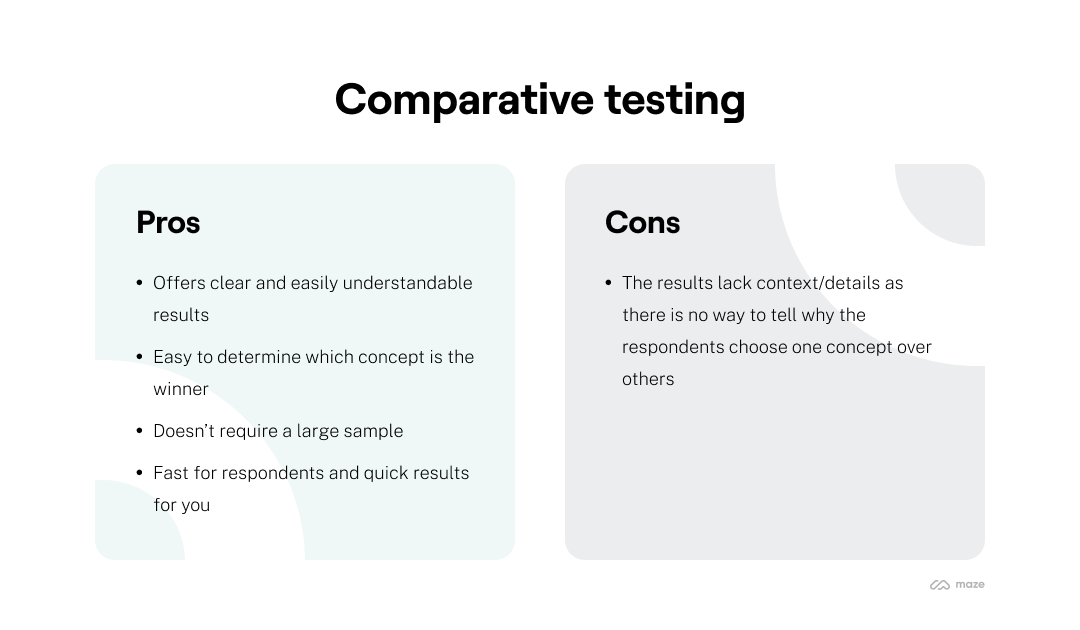

3. Comparative testing

This one’s the simplest of all the concept test methods since you share two or more concepts with survey respondents and ask them which they like the best.

With comparative testing, you have two options to get your audience’s insights. One: use rating questions with a common scale to ask respondents to compare test variants.

A rating question will read along the following lines for an example feature ideas test:

Please rate the usefulness of the following feature ideas on a scale of 1-10 where 1 is not useful at all and 10 is the most useful.

And, two: use ranking questions. These survey questions ask respondents to compare variants by arranging them in order of their preference. So, for the same feature ideas example, a ranking question will read:

Please rank the usefulness of the following feature ideas, with the first one in your list being the most useful and the last one being the least useful.

Alternatively, use a Likert scale that asks respondents to rate (usually on a five- or seven-point scale) how much they agree or disagree with a statement you give them.

With comparative tests, you don’t need a large respondent sample, which makes this a budget-friendly concept test method. The only kicker? Results from this test lack context, as they don’t explain why respondents choose one concept over another. This also means that you don’t get sufficient details to improve your concept. That’s where protomonadic testing might come in handy.
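As a rough illustration of how rating and ranking answers might be compared across concepts, here is a minimal Python sketch. The data shapes and function names are assumptions made for this example, and averaging is only one simple way to summarize the responses.

```python
from statistics import mean


def mean_ratings(ratings):
    """ratings: list of dicts mapping concept -> score on the shared scale."""
    concepts = ratings[0].keys()
    return {c: mean(r[c] for r in ratings) for c in concepts}


def mean_ranks(rankings):
    """rankings: list of lists, most preferred concept first. Lower is better."""
    concepts = rankings[0]
    return {c: mean(r.index(c) + 1 for r in rankings) for c in concepts}


if __name__ == "__main__":
    # Hypothetical answers from three respondents on a 1-10 usefulness scale.
    ratings = [
        {"Feature A": 8, "Feature B": 5},
        {"Feature A": 6, "Feature B": 7},
        {"Feature A": 9, "Feature B": 4},
    ]
    rankings = [
        ["Feature A", "Feature B"],
        ["Feature B", "Feature A"],
        ["Feature A", "Feature B"],
    ]
    print(mean_ratings(ratings))
    print(mean_ranks(rankings))
```

Both summaries tell you which concept respondents prefer, but, as noted above, neither explains why.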

Comparative testing

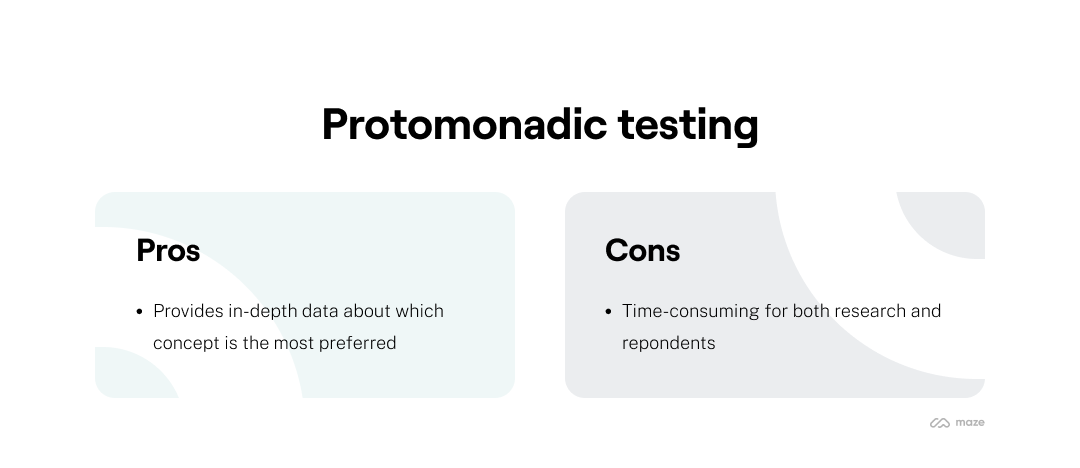

4. Protomonadic testing

This method involves asking respondents to evaluate each concept in turn, followed by a comparative test to determine which variant they prefer.

Put simply, a protomonadic test is a combination of sequential monadic and comparative tests.

In a sense, this test makes up for the shortcomings of comparative testing by quizzing respondents about the concepts.

So, in a concept test on a web page redesign, for example, participants are first divided into groups, with each group seeing all the designs and sharing their observations by answering a lengthy questionnaire. This is then followed by another survey, one that asks them to either rank or rate the designs.

Naturally, these concept tests are lengthier than other tests as they pack two tests into one. This also translates into more time and work for both the researchers and respondents who have to prepare more test surveys and answer more questions, respectively.

Not to mention, all the time that this research method takes can increase the risk of nonresponse bias and reduced completion rate. However, if getting both qualitative and quantitative data is important for you, then protomonadic testing is the way to go.
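To picture how the two parts fit together, here is a minimal Python sketch of a protomonadic survey flow. The step dictionaries, the `{concept}` placeholder, and the wording of the final question are all hypothetical choices for illustration.

```python
import random


def protomonadic_flow(concepts, evaluation_questions, seed=None):
    """Build the step list: per-concept evaluation first, one comparison last."""
    rng = random.Random(seed)
    order = list(concepts)
    rng.shuffle(order)  # randomize concept order, as in sequential monadic tests
    steps = [
        {"type": "evaluate", "concept": c, "question": q.format(concept=c)}
        for c in order
        for q in evaluation_questions
    ]
    # The closing comparative question asks for an overall preference.
    steps.append({
        "type": "compare",
        "question": "Which design do you prefer overall?",
        "options": sorted(concepts),
    })
    return steps


if __name__ == "__main__":
    flow = protomonadic_flow(
        ["Design A", "Design B"],
        ["How useful is {concept}, on a scale from 1 to 5?",
         "What are your favorite aspects of {concept}?"],
        seed=1,
    )
    for step in flow:
        print(step["type"], "-", step["question"])
```

The step count grows with every concept and every evaluation question, which is why these surveys run longer than the other methods.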

Key elements of a concept testing survey

Brevity helps make for an effective concept testing survey. A concise concept test will prevent tiring your participants out or ending up with scattered learnings. Shelly explains:

Make the test short and fast to answer. Ask the right questions, and nothing more.

Shelly Shmurack

Product Manager for Walmart Global Tech

While not all of the survey elements listed below may be right for your concept test, weigh up which of these components will help you gather the necessary results and choose accordingly.

1. Clear definition of what you want to learn

Before you start creating the survey itself, outline what you and your team are aiming to learn, as well as “the limitations of the test, meaning, what you are not going to learn,” says Netali Jakubovitz, Senior Product Manager at Maze.

“It's important to have these clearly set up, so that you won't bias the test and the answers to false learnings, and eventually to wrongful decision making,” Netali continues.

A clearly defined goal will help guide the creation of your questions. Think of what you’re aiming to learn as the destination, and the test as a map that will lead you there.

Beware of any misleading questions or ill-conceived phrasing that can lead you astray. While it's tempting to try to learn as much as possible, keeping a focus on your target is of vital importance.

Grainne Conefrey, Product Manager at NewsWhip, advises that you keep from "holding on to questions out of curiosity - there is always one question you’d love to sneak in! If the question won’t provide you with information that will help you to make a decision on the concept, remove it."

2. Introduction and context

Introducing your survey respondents to the concept is important in order to give them context. Grainne recommends that you use “a clear intro that provides the customer with an accurate description about the concept.”

This can be done using a combination of a written description and designs, depending on your concept test format. However, be careful not to go overboard when introducing participants to your idea. If you start giving them a marketing pitch about how fantastic your product is, you might add bias to your results. Simplicity is key so that you capture your target audience's authentic reaction to the concept.

3. Screening questions

The survey results show how your target audience feels about your concept, and they also let you break down which demographics it appeals to most.

By asking a few key questions about your respondents’ demographics and consumer behaviors, you can enhance your segmentation analysis. Key insights about which groups feel most strongly about certain features can inform marketing strategies for your concept and aid a successful product launch.

Segmentation questions also help to ensure that the respondents fit the target audience description of the concept. “Make sure you have qualified participants and are targeting the right audience; screening questions at the start of a survey can help to filter out participants,” Grainne says.
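Filtering on screening answers could look something like the following Python sketch. The question keys and allowed answers are made up for this example, so swap in whatever defines your own target audience.

```python
def qualifies(answers, criteria):
    """True when every screening answer falls within the allowed values."""
    return all(
        answers.get(question) in allowed
        for question, allowed in criteria.items()
    )


if __name__ == "__main__":
    # Hypothetical screening criteria for the concept's target audience.
    criteria = {
        "uses_similar_product": {"yes"},
        "age_group": {"25-34", "35-44"},
    }
    respondents = [
        {"id": 1, "uses_similar_product": "yes", "age_group": "25-34"},
        {"id": 2, "uses_similar_product": "no", "age_group": "25-34"},
        {"id": 3, "uses_similar_product": "yes", "age_group": "55-64"},
    ]
    qualified = [r["id"] for r in respondents if qualifies(r, criteria)]
    print(qualified)
```

Keeping the screening answers on each response, rather than discarding them after filtering, also lets you reuse them later for segmentation analysis.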

4. Concept validation questions

There are several ways you can determine respondents’ reactions to your concept. You can ask for their likes or dislikes about the concept or, alternatively, have them evaluate the idea against a list of attributes. The Likert scale is a common rating system and an approachable, data-driven way to assess participants’ reactions. Questions could include:

- How useful is Concept A, on a scale from 1 to 5 (1 being the least useful, 5 being the most useful)?

- How trustworthy is Concept A, on a scale from 1 to 5?

- What are your favorite aspects of Concept A?

- What are your least favorite aspects of Concept A?

These questions usually serve as the core of your validation and learnings. “After sharing the context and allowing the tester to see the suggested interface, we would guide them to the two to three main questions we aim to validate with the test,” Shelly says.
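Once responses to a rating question like the ones above come in, a summary might be computed along these lines. This is a minimal Python sketch: the function name is hypothetical, and the "top-two-box" share (the fraction of respondents choosing the two highest points) is a common market-research convention added here for illustration, not something this guide prescribes.

```python
from collections import Counter
from statistics import mean


def summarize_likert(scores, scale_max=5):
    """Summarize responses to one rating question on a 1..scale_max scale."""
    if any(not 1 <= s <= scale_max for s in scores):
        raise ValueError("score outside the scale")
    counts = Counter(scores)
    top_two = counts[scale_max] + counts[scale_max - 1]
    return {
        "n": len(scores),
        "mean": mean(scores),
        "top_two_box": top_two / len(scores),
        "distribution": {p: counts[p] for p in range(1, scale_max + 1)},
    }


if __name__ == "__main__":
    # Hypothetical answers to "How useful is Concept A, on a scale from 1 to 5?"
    usefulness = [5, 4, 4, 3, 2, 5, 1, 4]
    print(summarize_likert(usefulness))
```

Looking at the full distribution alongside the mean helps you spot polarized reactions that an average alone would hide.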

Crafting these questions requires a careful balancing act, Netali cautions. She advises trialing the test with people from your company prior to testing the actual respondents to help smooth out any internal biases or poorly worded questions.

Netali explains: “Run your test with an internal team to make sure the questions are well understood, the survey is not overwhelming or tiring, and that the way you collect answers to specific questions would allow you to process them and inform decision making later on.”

“For example, would open-ended questions enable data processing? Would multi-select questions generate confusion instead of contributing to focus?” she adds. “Do you really want to enable that ‘other’ option? All of these are valid, but should be done intentionally.”

Tip 💡

For more on survey design principles, check out this guide from the Head of Research at Stripe.

Grainne agrees, recommending: “It’s always good to get a few different sets of eyes to read through the questions and help you to remove any bias or leading questions.”

5. Market and competitor assessment

Part of your justification for your concept will likely include comparisons to competing, substitute, and/or complementary products.

Asking a couple of questions about respondents’ awareness of existing products and their related consumption habits can give you more insight into how your concept stacks up against the status quo. Questions about the competition can further validate your concept’s viability in the market, or signal that it’s time to go back to the drawing board. Here are some examples of questions you could ask regarding competing products:

- How often do you use Concept B (competition)?

- How often do you purchase Concept B?

- Which product are you more likely to buy?

- Which product would you use more often?

Remember to keep out any biased language that could color your results. This lesson is important to remember throughout the creation of your concept test, but it’s especially easy for bias to creep in when you’re comparing your product to a competitor.

“Make sure you ask questions that would not bias the results, like providing a hint in the question itself or including positive/ negative adjectives in the body of the question when asking about an emotional reaction,” Netali reiterates.

6. Likelihood to purchase questions

Ultimately, the aim is to have customers buy your product, so questions related to your target audience's likelihood to purchase are always a good idea.

Examples:

- How likely are you to recommend this product to a friend?

- How would you prefer to purchase the product?

- How would you price this product?

7. Situation evaluation

Situation evaluation can add another dimension of insights to your concept test. Asking where or when survey respondents would use the product and how often they’d use it might further validate your concept, or identify where there’s room for improvement. Possibly there's a scenario your product would be useful for that you and your team haven't even considered, but that naturally occurs to consumers.

Situation evaluation provides more in-depth analysis, so consider adding it to your concept test survey. Here are some potential situation evaluation questions you could use:

- Where would you use Concept A?

- When would you use Concept A?

8. Thank you message and future participation

End the survey with a thank you message and, if needed, a question asking respondents whether they would like to participate in further tests. Often this will involve some sort of monetary incentive, like a gift card. Since concept tests prove important at multiple stages of the product development process, it’s useful to have a large bank of willing participants at the ready.