Chapter 3

How to overcome cognitive bias in user research

Overcoming cognitive biases and preventing them from damaging UX research is challenging, but this chapter is here to help. In this part of our guide, we'll explore how to identify and confront different cognitive biases, and how to keep them from subtly distorting your UX research.

Cognitive biases shape how we interpret data—often unconsciously. In UX research, these mental shortcuts can lead to flawed insights, misleading conclusions, and products that miss the mark. So, how can you eliminate them? Let’s find out.

How to overcome different types of cognitive biases in UX

To run unbiased, accurate UX research, you need a structured approach—one that challenges assumptions, diversifies data sources, and ensures neutrality at every stage. These strategies will help prevent common cognitive biases from distorting your insights and improve the decision-making process.

Need a quick summary?

✅ Use neutral questions—no leading language

✅ Start broad, then narrow down research focus

✅ Challenge assumptions before research begins

✅ Watch user behavior, not just their words

✅ Mix up question order to prevent order bias

✅ Get peer reviews to catch blind spots

✅ Diversify research methods—don’t rely on one data source

1. Avoid asking leading questions

Biases this impacts: anchoring bias, framing effect, serial-position effect, question-order bias, peak-end rule, clustering illusion

Providing objective insights ensures recommendations are based on evidence and not opinions.

The way you frame a question influences the answer you get. If you ask, “How much do you like this feature?”, you’re already assuming the user likes it. Anchoring bias, the framing effect, and leading questions can all creep in early, while you write your research questions, conduct interviews, and report on results.

How to avoid it:

- Use neutral wording: Avoid adjectives like good/bad that steer answers. Construct the right research questions to guide your research

- Start broad, then narrow down: Don’t assume facts too early

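- Randomize question order: Vary the order of questions and answer options between participants, so nobody is nudged toward the first or last option (serial-position effect) and earlier questions don’t color later ones (question-order bias)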

- Test your questions: Get a second opinion to spot unintended bias
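If you build your own surveys or interview scripts, randomizing the order can be sketched in a few lines of Python. This is a minimal illustration, not a feature of any particular research tool: the function name `randomized_order`, the `study_seed` parameter, and the sample questions are all hypothetical. Seeding by participant ID keeps each person's order reproducible, so you can later check which order they saw.

```python
import random


def randomized_order(items, participant_id, study_seed="study-1"):
    """Return a shuffled copy of items that is stable for a given participant.

    The original list is left untouched. The seed string combines a
    hypothetical study identifier with the participant ID.
    """
    rng = random.Random(f"{study_seed}:{participant_id}")
    shuffled = list(items)
    rng.shuffle(shuffled)
    return shuffled


# Hypothetical, neutrally worded questions
questions = [
    "How did you find the checkout process?",
    "What did you expect to happen after adding an item?",
    "Describe the last time you searched for a product.",
]

order_p1 = randomized_order(questions, participant_id="P1")
order_p2 = randomized_order(questions, participant_id="P2")
```

Plain shuffling only balances positions on average. If a few broad questions must always come first, shuffle only the narrower questions that follow them.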

2. Don’t make assumptions about users

Biases this impacts: confirmation bias, false-consensus bias, fundamental attribution error

It’s easy to assume that users think like you—but they don’t. If you build research around assumptions, you risk recruiting the wrong participants, asking the wrong questions, and making flawed design decisions.

For example, if you assume “Most users prefer mobile over desktop,” you might focus research only on mobile users, missing key insights from desktop users. The result? A product that works well for some but fails others.

How to avoid it:

- Question your assumptions: List everything your team thinks is true before starting research. What do you actually know vs. what you assume?

- Set clear research goals: Define your UX research objectives (what you’re trying to learn). Vague or unfocused research invites hindsight bias and weak conclusions

- Diversify participant recruitment: Include users with different demographics, behaviors, and experience levels. Use tools like the Maze Panel to filter and find the right audience from three million B2B and B2C participants in 130+ countries, so you can capture different points of view.

- Use mixed research methods: Pair qualitative interviews with quantitative data to validate findings

- Write open-ended research questions: Yes/no questions reinforce self-serving bias—let participants express their real thoughts

- Review research with fresh eyes: Have a neutral team member review questions and findings to spot bias

- Document insights in real-time: Memory can be biased—take structured notes and record sessions when possible

- Leave room for pauses: Let participants think before they respond to avoid influencing their answers

3. Reduce experimenter and participant bias

Biases this impacts: empathy gap, social desirability bias

Cognitive biases don’t just affect participants’ answers—they also shape researchers’ decisions. Experimenter bias happens when your own cognitive biases shape how you conduct research, interpret data, or interact with participants.

Participant bias occurs when users modify their responses based on how they think they should answer.

For example, if a researcher nods or reacts positively to an answer, participants may read it as approval and adjust their later responses to match. Similarly, in unmoderated research, poorly phrased surveys can introduce availability heuristic errors, where users rely on recent experiences rather than reflecting on long-term behavior.

These biases show up in both moderated and unmoderated research, but in different ways.

How to avoid it:

- Limit reactions during research: Avoid nodding, smiling, or showing approval when participants respond

- Use neutral body language and tone: Your presence as a researcher can influence how participants react

- Encourage honest feedback: Remind participants there are no right or wrong answers

- Use a structured approach: Standardize research scripts and question formats to avoid inconsistent phrasing.

- Have multiple decision-makers review findings: Collaboration reduces blind spots and prevents a single researcher’s biases from shaping conclusions

- Alternate research facilitators: Different moderators bring different viewpoints and reduce the halo effect in responses

- Incorporate diverse perspectives: Involving researchers from varied backgrounds minimizes attribution bias and provides a broader view of user behavior

Tips to avoid cognitive biases in user research

Many of the ways you can overcome cognitive biases ultimately come down to being aware of your own and your team’s assumptions, and employing UX research best practices. Recognizing these biases early helps you make better decisions and ensures insights reflect real user behavior, not researcher assumptions.

Here’s how to minimize unconscious bias in your research process.

List assumptions with your team early on

Before research, list your team’s hypotheses about users. Assumptions may seem logical, but they can introduce confirmation bias, where teams unknowingly favor information that aligns with their past experiences and existing beliefs.

Start broad with your assumptions, then funnel down and get specific. Instead of assuming users will prefer a new feature, ask, “What evidence do we have that supports this?” Documenting these assumptions makes it easier to spot blind spots in decision-making before they influence the research process.

Create a research plan with objectives and goals

Without clear objectives, teams often cherry-pick or anchor onto the first piece of information they collect. For example, if a research team tests a new checkout flow and finds that five users complete it quickly, they might assume the redesign is successful. However, without defining success criteria upfront, such as measuring drop-off rates across 100 users, they risk misinterpreting early data and ignoring broader usability issues.

Define UX metrics like task completion rates, error rates, or engagement time to ensure findings reflect real user behavior. Teams also fall into false-consensus bias, assuming findings apply to all users when they come from a narrow group. Testing only with tech-savvy users might suggest an interface is intuitive, but less experienced users could struggle. Standardizing participant diversity prevents bias from skewing insights.
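To see why five quick completions are weak evidence, it can help to put an uncertainty range around a task completion rate. The sketch below uses the Wilson score interval, a standard formula for proportions. The function name `completion_rate_interval` and the 90-out-of-100 figure are hypothetical and only there for illustration.

```python
import math


def completion_rate_interval(completed, attempted, z=1.96):
    """Approximate 95% Wilson score interval for a task completion rate."""
    if attempted <= 0:
        raise ValueError("attempted must be positive")
    p = completed / attempted
    denom = 1 + z**2 / attempted
    center = (p + z**2 / (2 * attempted)) / denom
    half = z * math.sqrt(
        p * (1 - p) / attempted + z**2 / (4 * attempted**2)
    ) / denom
    # Clamp to [0, 1] to guard against floating-point overshoot
    return max(0.0, center - half), min(1.0, center + half)


# Five out of five users completed the flow in an early pilot
pilot_low, pilot_high = completion_rate_interval(5, 5)

# Hypothetical follow-up: 90 out of 100 users completed the flow
full_low, full_high = completion_rate_interval(90, 100)

print(f"Pilot (n=5):   {pilot_low:.0%} to {pilot_high:.0%}")
print(f"Study (n=100): {full_low:.0%} to {full_high:.0%}")
```

Under these assumptions, the five-user pilot is still compatible with a true completion rate as low as roughly 57%, while the 100-user study narrows the range considerably. Agreeing on the sample size and the target rate before testing keeps the team from anchoring on the first encouraging result.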

Conduct research as a team

When one person conducts and analyzes a study alone, their personal biases shape how they frame questions, interact with participants, and interpret results. Ask different team members to review the same data independently. Rotate facilitators to prevent observer bias, where a researcher’s presence influences participants’ responses.

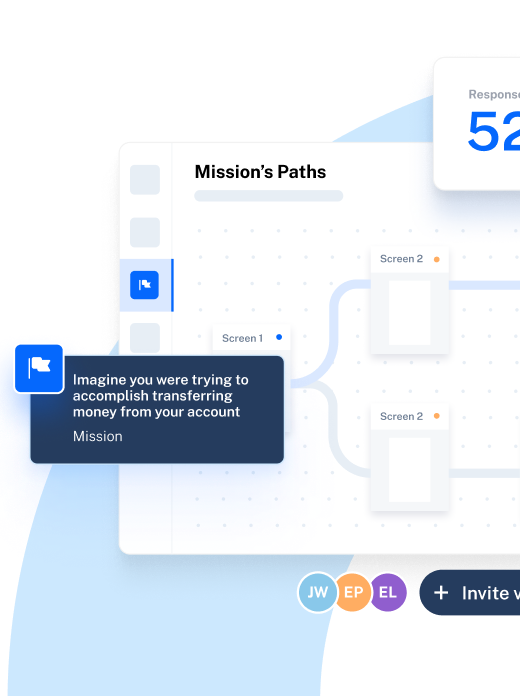

With Maze, you can invite multiple researchers to comment on reports, approve maze blocks, and review findings together in real-time. Plus, you can assign roles and access permissions, making it easier to include stakeholders, clients, and external experts in the research process.

Question people’s reasoning

Users often rely on heuristics (mental shortcuts) rather than critical thinking. If a participant says they like a design, ask “Why?” or “What makes it better than alternatives?” Digging deeper reveals whether their feedback comes from real experience or from a snap judgment. Compare self-reported data with behavioral insights to see if users act the way they say they do.
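Comparing what participants say with what they do can be as simple as lining up the two data points per session. The sketch below is one possible way to do that, assuming you record a yes/no self-report and a yes/no observed outcome for each participant. The `Session` fields and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Session:
    participant: str
    said_easy: bool       # self-reported: "that was easy"
    completed_task: bool  # observed behavior during the session


def say_do_gaps(sessions):
    """Return participants whose self-report disagrees with their behavior."""
    return [s.participant for s in sessions if s.said_easy != s.completed_task]


# Hypothetical session notes
sessions = [
    Session("P1", said_easy=True, completed_task=True),
    Session("P2", said_easy=True, completed_task=False),
    Session("P3", said_easy=False, completed_task=False),
]

gaps = say_do_gaps(sessions)
print(gaps)
```

Participants flagged this way are good candidates for a follow-up “Why?”, since their stated opinion and their behavior point in different directions.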

Refrain from oversharing details and emotions

Go through your research materials, including your research plan, testing script, and research questions, and comb through for any emotive wording or biased language. Remember the framing effect and consider how your language could prime participants to think in certain ways.

When moderating user research, keep your body language neutral and use neutral phrases such as “Thanks for sharing” or “I appreciate your feedback” to acknowledge participants’ responses, rather than saying anything that sways them one way or the other.

Provide space for your participants

Allow your participants time. You want to avoid influencing participants’ answers, which may mean sitting in silence and giving people a chance to recall their experience, form their thoughts, or structure their ideas, rather than jumping to conclusions or finishing their thoughts for them.

And remember, if your participants appear fatigued or overwhelmed, remind them of their right to withdraw, or give them a break—answers impacted by a lack of energy are biased, too.

Make user feedback effective by removing cognitive biases

Reducing bias in your research lets you deliver accurate insights to your product teams, which leads to better-informed decisions and a more effective product.

We live with cognitive biases in all areas of our life, but they can strongly hinder product development. By understanding your own biases and knowing how to spot them in others, you’re already well on your way to breaking down the biases that crop up in your UX research.

The fundamental goal of all products is to serve their users, and to provide an exceptional user experience—by ensuring your research is free of bias, you ensure the decisions that influence the product are accurate, honest, and impactful.

Frequently asked questions about overcoming cognitive biases in user research

How do you overcome cognitive biases in user research?

The first step to overcoming cognitive biases is being aware that they exist, then noting the ones that may be present in your own subconscious. Biases are especially hard to spot because they are so deeply rooted in our subconscious, but these practices help you identify and combat them in UX research:

- Identify your own assumptions with your team early on

- Create a research plan with clear objectives and goals

- Conduct research and analysis with a colleague

- Question people’s reasoning and ask for evidence

- Refrain from oversharing details and emotions

- Provide space for your participants to form their own opinions