As research teams scale, many find themselves producing more insights than ever—yet struggling to see them shape strategy or roadmaps. According to Maze’s 2025 Research Maturity Model, most organizations plateau at Level 3: Developing, where research is valued but rarely drives change.

In this article, we explore what it takes to break through that ceiling—why process alone isn’t enough, and how modern research teams can progress to Level 4 and beyond, where insights become integral to decision-making and business strategy.

TL;DR: From Process to Impact in UX Research

Common stall point: Most teams plateau at Level 3 research maturity.

Problem: Strong processes, but little roadmap or strategy influence.

Shift required: Research must support live decisions, not just deliverables.

How to break through:

- Start with stakeholder risks and priorities

- Frame insights as business levers (risk, cost, momentum)

- Deliver quick signals during decision windows

- Co-synthesize with stakeholders for shared ownership

- Align cadence with sprints and OKRs

Outcome: Research becomes a driver of change, moving teams toward Level 4 maturity and beyond—where insights shape strategy.

You did everything right. So why did nothing change?

You ran the research.

You got the insights.

You wrote the deck.

And then…nothing moved.

No roadmap shift. No feature reevaluation. No follow-up questions. Just a slow fade into the Notion archive. You checked every box a “mature” research team is supposed to check, and still, you feel like a service desk.

This isn’t just frustrating. It’s draining. It makes you question your competence, your role, your entire field. Maybe you’ve started sharing less often. Maybe you roll your eyes when someone asks for a “quick usability test.” Maybe you’re just tired.

You’re not alone.

Maze’s 2025 Research Maturity Model shows that most organizations live in the middle. They’ve moved beyond basic usability tests and skepticism but haven’t figured out how to make research part of the company’s bloodstream. Most are stuck in Level 3: Developing.

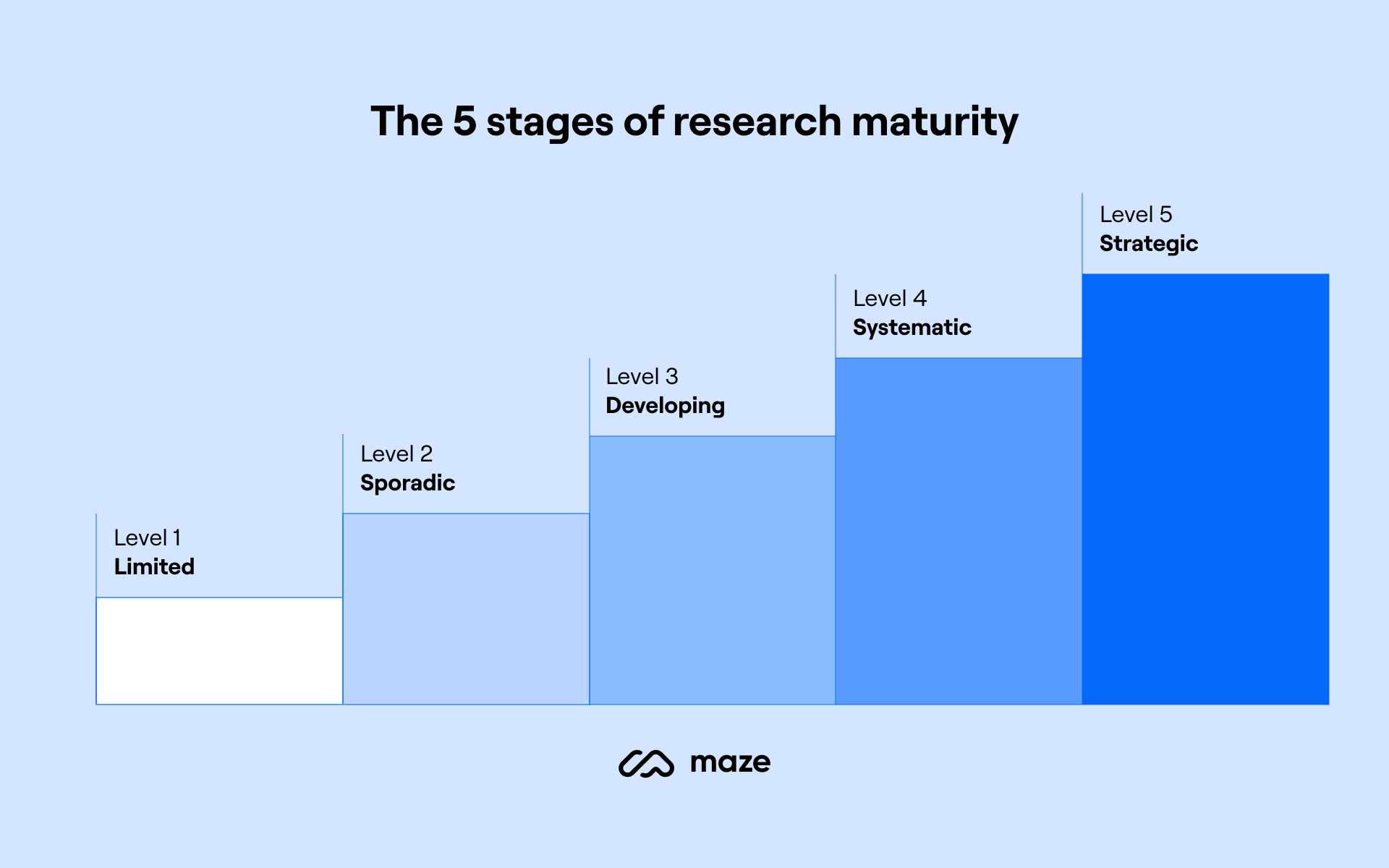

Maze’s 5 levels of research maturity

Level 1 Limited: No dedicated research. Teams rely on analytics and support tickets.

Level 2 Sporadic: Research happens, but it’s ad hoc. Mostly used to validate decisions already made.

Level 3 Developing: Research begins earlier. Teams use it for exploration. Influence is fragile.

Level 4 Systematic: Research is continuous, visible, and part of the product lifecycle. Teams ask for it. Roadmaps shift because of it.

Level 5 Strategic: Research informs company direction. It’s tied to business outcomes and has executive sponsorship.

Most researchers live in Level 3. They’re included in processes, but not shaping bets. They’re running more studies, but not shifting power.

That’s where this story begins, with two researchers. Both capable. Both well-intentioned. Both hired to raise maturity. Only one managed to change anything.

Meet Jack and Diane

Jack and Diane joined the same mid-stage B2B SaaS company in the same quarter. The business had big growth targets and rising churn. The product team had doubled in size, and executives wanted more “user insight.”

Jack was brought in for structure. In interviews, he talked about rigor, repeatability, and research roadmaps. Executives liked the sound of it.

Diane was brought in because someone said, “We need someone who can drive change across the org.” She had spent most of her career in messy startups and agencies where documentation never moved the needle unless you walked into a room and made it matter.

They rarely overlapped. But over time, their approaches became a living experiment in what moves an organization beyond Level 3.

Jack’s approach: The process architect

Jack hit the ground running.

- Week one: Audited all past research

- Week two: Launched a Notion hub with project statuses, templates, and guides

- Week three: Every request was triaged, every study named, numbered, and scheduled

He built clear processes for discovery, evaluative, diary studies, and post-launch reflection. He ran weekly office hours. He offered training on asking better research questions. He even launched a quarterly showcase where stakeholders could hear the latest insights.

If you looked only at his systems, you’d say: this is what maturity looks like. Methodical, visible, measurable.

But little changed.

The studies were thorough but rarely shaped decisions. Roadmaps stayed the same. Executives glanced at his decks, then moved on. Outside the design org, few showed up for share-outs.

Jack had made research easier to access, but not harder to ignore.

Diane’s approach: Start with the system

For her first month, Diane ran no tests, built no templates, and shipped no decks.

She booked thirty conversations with stakeholders across PM, design, sales, customer success, marketing, data, and leadership. She asked questions like:

- “What’s most important to you right now?”

- “What are your biggest challenges?”

- “What decisions are you making right now that feel risky?”

- “Where do you wish you had more confidence?”

- “What kind of evidence do you trust?”

- “When was the last time research helped you win an argument?”

These weren’t just for intel. They built trust before she ever asked for it. She paired what she heard with data already trusted by the business, so her first recommendations felt grounded.

She watched how influence moved in the company: who spoke first, who swayed decisions, who could pause a roadmap item with a single comment. Her first research project wasn’t a usability test. It was a cross-functional workshop tied to a business-critical decision: whether to pivot onboarding for enterprise clients.

She facilitated the session, dropped in just enough qualitative evidence to spark debate, and guided the team toward clarity.

That meeting changed the roadmap.

Not because the evidence was groundbreaking. But because it created a shared language for making decisions. Research became the lever for alignment. Over the next two months, Diane ran three more small studies. Each was designed not just to answer a question, but to shift momentum on a live decision.

A tale of two maturity models

Jack was squarely in Level 3: Developing.

At this stage:

- Research happens earlier in the process

- Teams value exploration, not just validation

- Methods diversify

- Stakeholders attend readouts

- Researchers are included in projects, not strategy

Level 3 feels good. Processes are in place. Visibility is up. But influence plateaus. Researchers stay busy, but the business impact is shallow. This isn’t a ceiling, but process alone doesn’t lift a team to Level 4.

Diane was already laying groundwork for Level 4: Systematic. She was:

- Creating continuous touchpoints

- Embedding synthesis in decision-making

- Framing findings as risks, opportunities, trade-offs

- Pulling research into roadmapping

- Coaching stakeholders to ask sharper questions

Jack made research accessible. Diane made it relevant.

Why Jack’s work doesn’t move the needle

On paper, Jack’s work looked perfect. Roadmaps aligned, studies scoped, decks polished, repos tagged. Colleagues thanked him in Slack. Yet when decisions came, the team kept moving as they always had.

Jack’s calendar told the story:

- Monday: Interviews

- Tuesday: Synthesis in Notion

- Wednesday: Deck prep

- Thursday: Share-out

- Friday: Scope next project

Full and productive. But no roadmap reviews, no go-to-market planning, no executive syncs. He was solving problems after they were defined, not shaping what problems mattered.

His repository was clean, but unused. An “insights folder” that no one opened unless prompted. His research was rigorous, but not indispensable. For example:

B2B:

Jack interviewed sales reps, synthesized pain points into personas, and presented them to PMs. They nodded, said “helpful context,” then pushed the same roadmap set by last quarter’s OKRs. His insights didn’t connect to sales funnel urgency.

B2C:

Jack ran a usability test on onboarding. Users didn’t understand the value prop until step 4. He clipped videos and wrote clear recommendations. The growth PM said “we’ll test that later” and shipped a small copy tweak. The team was focused on paid acquisition, not onboarding drop-off.

The issue wasn’t rigor. It was framing. Jack delivered insights as finished products. The business needed decision levers.

Are you stuck in Level 3?

Most researchers are. It feels like progress, but lacks business impact.

Signs you’re here:

- Studies don’t change roadmap priorities

- You’re asked to “support” decisions already made

- Share-outs get polite attention but no follow-up

- You’re absent from strategy and go-to-market planning

- You’re documenting rigorously, but no one references it unprompted

What’s missing is the link to company priorities like revenue, retention, efficiency, and market position.

Take the Research Maturity Level Quiz to discover which level your org is at and how to advance your research program.

Diane’s playbook

1. Start with stakeholder fear, not user pain

She asked:

- “What decision feels risky right now?”

- “What would be costly to get wrong?”

- “Where are we flying blind?”

This uncovered urgency. Then she designed studies around those decisions.

💡Try it

Ask a PM: “What would you love to be confident in before launch?” Then scope a lightweight Maze test to explore it. Map the output to a metric the business already tracks.

2. Frame research as decision support

She never sent 30-slide decks. Instead she facilitated sessions with three options:

- What to do if X is true

- What to do if Y is true

- What happens if we stay undecided

She shifted research from reporting to alignment.

3. Use speed to stay relevant

She used Maze for quick signals:

- 48-hour concept evaluations

- First-click tests for interaction failures

- Copy comparisons before launch

- Quick reaction polls on prototypes

Not to prove every point, but to provoke action while the window was open.

4. Co-synthesize with stakeholders

She shared rough notes and asked:

- “What stands out?”

- “Does this match what you’re hearing?”

By inviting people into the mess, she built ownership before delivering conclusions.

💡Try it

Create a FigJam board for clustering quotes with stakeholders instead of presenting a polished theme.

5. Speak in outcomes, not findings

She tied every user moment to business levers.

- “This onboarding step confuses users” → “…which may explain why we lose 22% of signups at Step 2.”

- “This language breaks trust” → “…which is risky given our enterprise push.”

For example:

B2B:

Old: Customers found the reporting dashboard confusing.

New: Dashboard confusion created more support tickets and slowed client onboarding. Streamlining would free sales and success teams for renewals.

B2C:

Old: Users dropped off midway through checkout.

New: Participants abandoned purchases midway through checkout, describing the flow as “sketchy.” Simplifying could rebuild trust and prevent churn.


Moving toward Level 4

Track uptake, not output

Keep a Research Impact Log:

- What you delivered

- What changed because of it

- Who referenced it without prompting

- Time from study to decision

Review this monthly with leads. Share the highlights in the same channel where OKRs get reviewed.
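If a spreadsheet feels too loose, the log can also live as a small script. The sketch below is one possible shape, not a prescribed format: the `ImpactEntry` fields mirror the four items above, and every name, date, and study in it is hypothetical and made up for illustration.

```python
from dataclasses import dataclass, field
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class ImpactEntry:
    """One row of a Research Impact Log (field names are illustrative)."""
    delivered: str                      # what you delivered
    delivered_on: date                  # when the study was shared
    change: Optional[str] = None        # what changed because of it, if anything
    decided_on: Optional[date] = None   # when the related decision was made
    unprompted_refs: list[str] = field(default_factory=list)  # who cited it unprompted

    def days_to_decision(self) -> Optional[int]:
        """Time from study to decision, or None if no decision is recorded yet."""
        if self.decided_on is None:
            return None
        return (self.decided_on - self.delivered_on).days


def monthly_summary(entries: list[ImpactEntry]) -> dict:
    """Roll the log up into uptake numbers rather than output numbers."""
    lags = [d for e in entries if (d := e.days_to_decision()) is not None]
    return {
        "delivered": len(entries),
        "led_to_change": sum(1 for e in entries if e.change),
        "referenced_unprompted": sum(1 for e in entries if e.unprompted_refs),
        "median_days_to_decision": median(lags) if lags else None,
    }


# Hypothetical entries, for illustration only.
log = [
    ImpactEntry("Enterprise onboarding workshop", date(2025, 3, 3),
                change="Onboarding pivot added to roadmap",
                decided_on=date(2025, 3, 9),
                unprompted_refs=["VP Product"]),
    ImpactEntry("Pricing page copy comparison", date(2025, 3, 10),
                change="New copy shipped",
                decided_on=date(2025, 3, 24)),
    ImpactEntry("Dashboard usability test", date(2025, 3, 17)),
]

summary = monthly_summary(log)
print(summary)
```

The useful part is the gap between `delivered` and `led_to_change`. If the first number grows every month and the second stays flat, that is the Level 3 plateau in numbers.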

Step one layer earlier

If you usually test prototypes, start by testing the riskiest assumption. If you usually meet at design kickoff, insert yourself at roadmap planning and ask, “Which bet scares us, and what would we like to learn before we lock it?”

Frame insights as risk levers

Instead of “Users had trouble with search.”

Use “66% of participants didn’t find search within 10 seconds, which likely drags conversion. We can’t afford that heading into peak season.”
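A number like that is simple to produce from raw test results. Here is a minimal sketch, assuming you have exported each participant's time to reach the target in seconds, with `None` for anyone who never got there. The function names, the 10-second threshold, and the sample data are all hypothetical and not tied to any particular tool's export format.

```python
from typing import Optional, Sequence


def miss_rate(seconds_to_target: Sequence[Optional[float]],
              threshold: float = 10.0) -> float:
    """Share of participants who did not reach the target within `threshold` seconds.

    A value of None means the participant never reached the target at all.
    """
    if not seconds_to_target:
        raise ValueError("need at least one participant")
    missed = sum(1 for t in seconds_to_target if t is None or t > threshold)
    return missed / len(seconds_to_target)


def risk_statement(rate: float, what: str, consequence: str) -> str:
    """Turn a rate into a one-line statement that names the business consequence."""
    return f"{rate:.0%} {what}, which {consequence}."


# Hypothetical results from six participants.
times = [4.2, None, 12.5, 8.0, None, 15.1]
rate = miss_rate(times)
line = risk_statement(rate, "didn't find search within 10 seconds",
                      "likely drags conversion")
print(line)
```

With a sample this small, treat the percentage as a signal to act on, not a measurement. The consequence clause is a hypothesis, so keep hedges like "likely" in the sentence.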

Treat research as narratives, not artifacts

Drop micro‑insights into living documents and meetings:

- Strategy docs: A one‑liner in context

- Sprint goals: A single user risk attached to a story

- Slack: A 60‑second Loom with a clip and a call to action

- Leadership update: One chart, one quote, one choice

Mirror product process and cadence

If the product team runs two‑week sprints, run studies that feed that rhythm. If the org tracks quarterly OKRs, align your research questions to those themes and deliver short briefs that slot directly into those reviews. In an AI‑heavy toolchain, speed is table stakes; integration is the difference. Research has to show up where the model outputs and business judgment meet.

Push smart democratization

Create research co‑pilots in PM and design. Give them a safe kit:

- One approved screener

- One concept test template in Maze

- A short guide on consent and data handling

- A rule that raw outputs are shared with you before anyone else

This spreads practice without dropping quality or ethics. It also builds a habit of asking better questions earlier.

Switching lanes: From deliverable to driver

Jack optimized for efficiency. Diane optimized for influence.

Jack said: “Here’s what we learned.”

Diane said: “Here’s how we change.”

The difference wasn’t the volume of research. It was the intention.

Shifting from Level 3 to Level 4 looks like:

- Asking sharper questions earlier

- Delivering signal inside the decision window

- Framing every point as risk, cost, urgency, or momentum

- Matching the product cadence so your work lands when it can still steer the ship

If your team runs two-week sprints, align your research rhythm with theirs. If leadership reviews OKRs quarterly, deliver insights that slot directly into those reviews.

You don’t need permission to do this. You can start now.

2025 Research Maturity Model Playbook

Advance your organization’s research practice and business impact with our new research maturity model—built on data from 500+ research, design, and product professionals.