TL;DR

AI moderation helps research teams scale interviews, reduce bias, and speed up analysis. Instead of relying solely on human moderators, research teams can deploy AI to guide sessions, adapt questions in real time, and generate instant transcripts and summaries. This makes it easier to run studies across time zones and participant groups without adding more overhead.

Researchers remain essential for empathy, contextual understanding, and strategy, while AI handles the repetitive work that slows teams down.

When research needs to move fast, traditional interviews can feel impossible—too many moderators to line up, too many hours of recordings to sort through, and insights that arrive long after decisions are made.

Enter: AI moderation.

In this guide, we walk you through how AI moderation works, what tools and workflows support it, and how to strike a balance between automation and the human oversight that keeps research user-focused and trustworthy.

What is AI moderation in user interviews?

AI moderation means using artificial intelligence to conduct and manage user interviews. The AI guides conversations, adapts in real time, and delivers transcripts and insights instantly, saving time and freeing researchers to focus on interpretation and strategy.

In practice, an AI moderator can help by:

- Running interview sessions: Greet participants, set context, and keep the interview on track

- Asking and adapting questions: Follow a script or change course based on responses, ensuring consistency across sessions while still feeling natural

- Capturing and analyzing responses: Generate transcripts, summaries, and tagged themes for faster review

- Scaling participation: Conduct multiple interviews simultaneously, across time zones and languages

AI moderation is active; it shapes the session as it’s happening, helping teams reduce delays, improve consistency, and scale their reach.

Why use AI moderation for interviews

Most user interviews today rely on a human moderator running a live session—either in person or over video. But with 63% of respondents to our 2025 Future of User Research Report citing time and bandwidth constraints as a major blocker for research, user interviews can often fall by the wayside.

While these interviews give teams valuable qualitative depth, they’re hard to scale. Each session needs to be scheduled, moderated, and analyzed manually, which slows teams down.

AI moderation speeds things up, and the industry is ready for it.

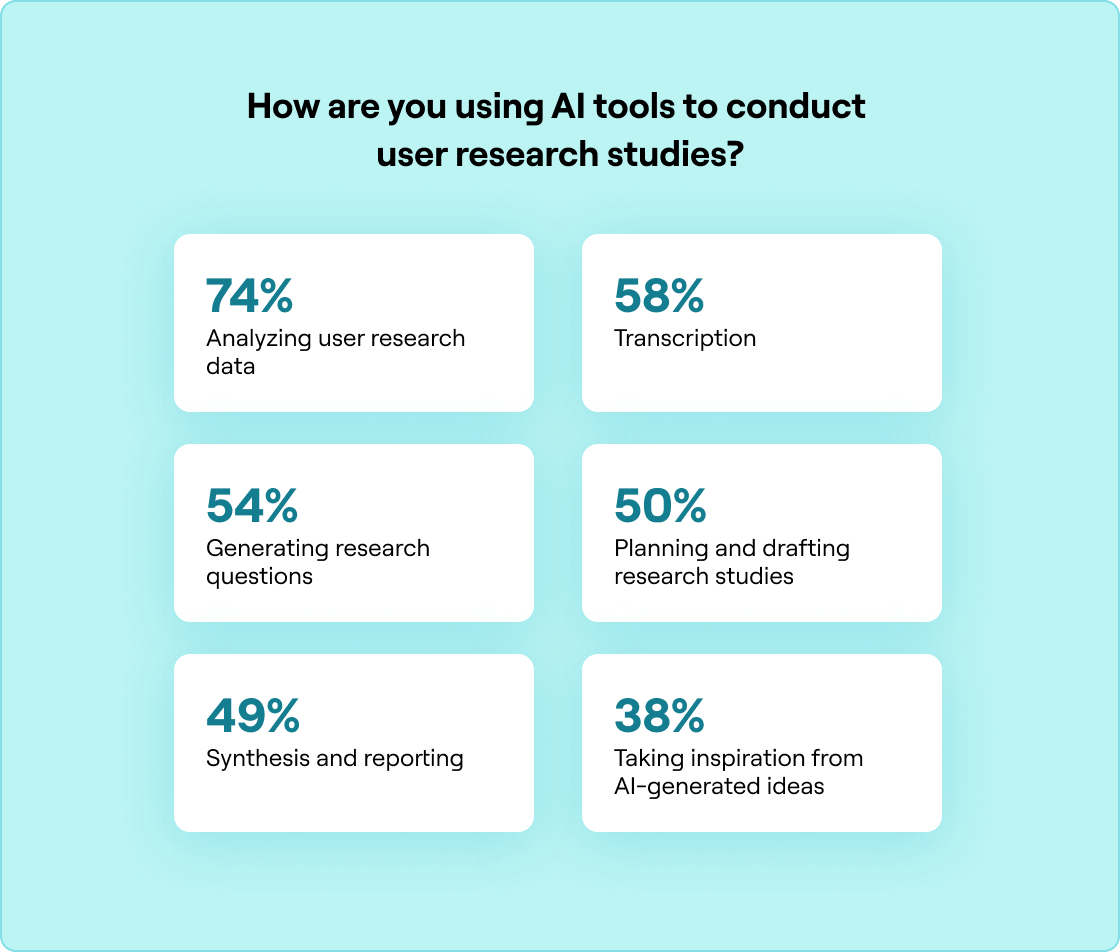

Our 2025 Future of User Research Report shows that UX teams are already using AI across the user interview process, including for:

- Analyzing research data (74%)

- Transcription (58%)

- Generating research questions (54%)

- Planning studies (50%)

With AI now embedded across these workflows, interview moderation is the next logical step towards streamlining research for busy teams.

Here’s a quick overview of the differences between traditional interviews and AI-led user interviews:

| | Traditional interviews | AI moderation |
|---|---|---|
| Efficiency | Time-consuming; requires scheduling and manual moderation | Conducts many interviews at once; enables asynchronous participation |
| Standardization | Varies by interviewer style, tone, or experience | Same flow and questions for all participants; reduces variability |
| Cost | Higher; includes interviewer time, travel, and scheduling | Lower overall; no travel or interviewer time required |
| Bias | More vulnerable to unconscious or appearance biases | Reduces unconscious and fatigue biases; minimizes social desirability effects |
| Rapport and empathy | Strong rapport and context sensitivity from human moderators | AI lacks emotional intelligence and may miss subtle cues in follow-up questions |
| Scalability | Difficult to scale; requires significant resources | Easily scales to hundreds of participants globally |
| Flexibility | Constrained by interviewer and participant availability | 24/7 access, multilingual, no scheduling needed |
| Participant comfort | Social dynamics may limit openness | Often greater on sensitive topics; reduced judgment or pressure |
| Emotional and cultural nuance | Excellent at navigating ambiguous or emotional conversations | Limited in detecting complex emotional or cultural cues |

How to conduct AI-moderated studies step-by-step

From setting clear research goals to reviewing automated transcripts, each step ensures that your AI-supported interviews stay consistent, scalable, and useful. Let’s look at how you can move through the process.

1. Define your research goals

Research goals shape the questions you ask, the datasets you collect, and the moderation decisions you’ll make later. Without them, it’s easy to generate transcripts that feel more like AI-generated content than actionable insight.

Common goals to guide your study include:

- Exploration: Capture user-generated content that reveals unmet needs, pain points, or behaviors

- Concept validation: Test whether a new idea or feature aligns with user expectations and community standards

- Usability assessment: Use AI-powered tools like Maze to pinpoint where users struggle in a workflow or product journey

- Comparison: Evaluate different prototypes or designs with consistent AI models to reduce variability

- Trust and safety focus: Explore how users perceive misinformation, disinformation, or harmful content in online environments

2. Choose your interview format and platform

Once your goals are set, choose how participants engage and the platform to run the study.

There are three common interview types:

- Structured interviews that follow a fixed set of questions

- Semi-structured interviews that mix consistency with flexibility

- Unstructured interviews that allow conversations to flow more freely

The right format depends on what you want to learn and how much depth you need.

If you’re looking for a research platform that can support your interview research from start to finish, try Maze’s Interview Studies. This purpose-built solution handles everything from participant recruitment and scheduling to hosting live sessions and capturing transcripts. Maze's AI moderator handles interview sessions and adapts questions in real time. For example, if a participant hesitates, Maze’s AI moderator can rephrase; if the conversation drifts, it brings participants back on track.

3. Define participant criteria and recruitment

Start with the right people. Define criteria—like demographics, professional background, or behavior patterns—that align with your research goals. You’re not cleaning data yet. Instead, you’re preventing irrelevant data from being collected in the first place. The right participants mean the insights you gather will actually reflect your users and help answer your research questions.
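To make the idea of screening before collection concrete, here is a minimal Python sketch. It is not Maze's API: the `Candidate` fields, the `ScreeningCriteria` class, and the `screen` helper are all hypothetical names chosen for illustration, and your own criteria will depend on your research goals.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A prospective participant. All fields are hypothetical examples."""
    name: str
    country: str
    role: str
    uses_product_weekly: bool


@dataclass
class ScreeningCriteria:
    """Illustrative criteria derived from the research goals."""
    countries: set[str]
    roles: set[str]
    require_weekly_use: bool = True

    def matches(self, candidate: Candidate) -> bool:
        # A candidate qualifies only if every criterion is satisfied.
        return (
            candidate.country in self.countries
            and candidate.role in self.roles
            and (candidate.uses_product_weekly or not self.require_weekly_use)
        )


def screen(candidates, criteria):
    """Split candidates into (qualified, screened_out) lists."""
    qualified = [c for c in candidates if criteria.matches(c)]
    screened_out = [c for c in candidates if not criteria.matches(c)]
    return qualified, screened_out
```

Keeping the screened-out list, rather than discarding it, makes it easier to review whether the criteria are too strict before the study launches.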

Once you know who you're looking for, Maze offers two powerful ways to reach them:

- Maze Panel gives you access to over three million B2B and B2C participants across 130+ countries, with 400+ advanced filters like demographics, technology usage, finance, and shopping habits.

- Maze Reach acts like a CRM for research participants, perfect for using your own community. Upload contact lists, segment by properties or past engagement, and send targeted campaigns with tracking and reminders built into Maze.

4. Design interview guide and scripts

Your interview guide is the backbone of the study. It ensures sessions stay consistent and that every participant is asked questions that tie back to your research goals. Keep your script simple, structured, and free from bias—open with broad discovery questions, then move into specifics about tasks, features, or workflows.
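One way to sanity-check a guide against these principles is a small script like the sketch below. Everything in it is an assumption made for illustration: the stage names in `STAGE_ORDER`, the short `LEADING_PHRASES` list, and the `check_guide` helper are hypothetical and are not part of any Maze feature. A phrase list can only flag obvious cases, so it supplements a human review rather than replacing it.

```python
from dataclasses import dataclass

# Hypothetical stages, ordered from broad discovery to specifics.
STAGE_ORDER = {"discovery": 0, "tasks": 1, "features": 2}

# A few phrases that often signal a leading question (illustrative, not exhaustive).
LEADING_PHRASES = ("don't you think", "wouldn't you agree", "how much do you love")


@dataclass
class Question:
    text: str
    stage: str  # one of the keys in STAGE_ORDER
    goal: str   # the research goal this question ties back to


def check_guide(questions, goals):
    """Return a list of human-readable problems found in the guide."""
    problems = []
    last_rank = -1
    for i, q in enumerate(questions, start=1):
        rank = STAGE_ORDER[q.stage]
        # Broad questions should come before more specific ones.
        if rank < last_rank:
            problems.append(f"Q{i}: '{q.stage}' question appears after a more specific stage")
        last_rank = max(last_rank, rank)
        # Every question should map to a stated research goal.
        if q.goal not in goals:
            problems.append(f"Q{i}: not tied to a stated research goal")
        # Flag phrasing that may push participants toward an answer.
        if any(phrase in q.text.lower() for phrase in LEADING_PHRASES):
            problems.append(f"Q{i}: possibly leading phrasing")
    return problems
```

An empty list means the guide passed these three checks. It does not mean the questions are good, only that they are ordered broad to specific, tied to a goal, and free of the listed phrases.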

💡 Not sure where to start? Our AI user research prompts can be adapted directly into your scripts. These examples cover discovery, usability, and concept validation, giving you a head start on phrasing effective, unbiased questions for your AI-moderated user interviews.

5. Pilot and launch the interview

Before inviting participants, run a pilot of your AI-moderated interview. A short internal test helps you spot unclear questions, awkward flow, or technical issues that could affect data quality. Piloting is a standard best practice across methods—whether you’re running UX surveys or prototype testing, that first dry run is where you catch gaps before they reach real users.

Use the pilot to check:

- Are the questions clear and unbiased?

- Does the AI ask relevant follow-ups and stay on track?

- Is the timing reasonable for participants?

- Are transcripts, tags, and summaries generated as expected?

Once everything works as intended, launch the full study.

6. Use AI to moderate the session

Maze’s AI moderator takes care of the heavy lifting while keeping you in control. Here’s how this AI tool works:

- Plan: You set the goals, and Maze’s AI interview assistant drafts the interview guide instantly. You can edit or refine the script at any time.

- Interview: The AI runs sessions autonomously across time zones and languages, adapting dynamically to participants’ answers with contextual follow-ups.

- Supportive features: Tools like Perfect Question (AI-powered phrasing checks) and Dynamic Follow-ups help ensure the conversation flows smoothly and stays relevant.

7. Analyze results with AI

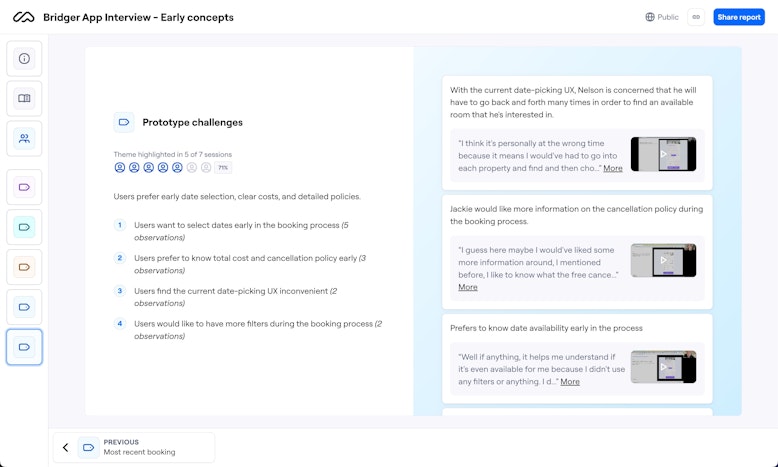

After the interview, Maze AI organizes the session into insights that are easy to share and act on. Transcripts, tagged moments, and themes are mapped back to your objectives, giving you a clear line from participant input to research findings. With automated theme analysis, you can find patterns faster and connect them directly to product decisions.

8. Deliver insights that drive action

It’s time to present and share your findings in a format that’s easy for stakeholders to understand and act on. That means curating highlights, summarizing key themes, and connecting insights back to the goals you set at the start.

Maze simplifies creating and sharing your UX research reports, with automated reporting to summarize themes and package up highlights into an easy-on-the-eyes, ready-to-share report.

Plus, reports can be shared in multiple ways:

- Public links make results accessible to anyone, even without a Maze account

- Private links keep reports restricted to authenticated team members

- Embeds let you drop reports directly into the tools your team already works in

- PDF exports provide a static version for offline access or executive decks

You can also export clips for presentations or generate shareable links for quick access.

Running AI-moderated interviews follows the same best practices as any strong research process: set clear goals, recruit the right participants, pilot your study, and keep human oversight in place.

With the workflow in place, the next question is timing: when should you lean on AI moderation, and when does a human moderator make more sense?

When to use AI moderation

AI moderation tools are most effective when you need interviews to run quickly, consistently, and at scale, without adding more work for researchers.

It’s a strong fit for:

- Scaling insights: After running human-moderated interviews, AI can expand the reach, validating findings across larger samples or multiple markets

- Tight deadlines: Automate transcripts, tags, and themes so results are ready in hours

- High volume needs: Run many sessions simultaneously without overloading researchers

- Global research: Multilingual support lets you scale interviews across regions with less overhead

- Sensitive topics: Some participants feel more comfortable sharing openly with an AI moderator

Limitations and challenges of AI moderation

AI can simplify interviews, but its limits show why human researchers remain essential. It lacks empathy and nuance, so hesitation, humor, or discomfort in a participant’s response may go unnoticed.

Context can be misread, with slang or sarcasm taken at face value, requiring researchers to step in and correct the record. Technical hiccups like missed words, repeated prompts, or transcription errors only add to the need for oversight. Plus, not everyone will be comfortable talking to an AI moderator in the first place.

Even the best AI moderation needs human review and assistance. Researchers must validate outputs, refine themes, and ensure insights align with business goals.

Best practices to get the most from AI moderation

AI can take on the mechanics of running interviews, but the quality of the outcomes still depends on how you set it up.

- Write great questions: AI moderators can only work with the script you provide. Avoid leading questions or jargon that participants may not understand. A clear, neutral tone reduces bias and ensures more reliable insights.

- Control pacing and tone: Participants should feel at ease. Keep questions concise and structure your guide to start broad before narrowing down.

- Personalize with detailed guidelines: AI systems need direction to adapt appropriately. Provide context, examples, and fallback options for tricky questions.

- Share insights in context: AI can summarize, but only humans can interpret. Researchers should add their perspective, highlight meaningful quotes, and connect results back to business priorities so findings drive decisions.

AI moderation and the future of user research

AI moderation is still fresh on the research scene, but it’s already reshaping how teams approach interviews.

Tools like Maze AI are showing how automation can reduce bias, speed up analysis, and scale interviews across time zones. From Maze’s AI moderator to the AI-powered thematic analysis, researchers now have access to tools that streamline their research process.

Over the next few years, these systems will keep improving using larger datasets, more advanced machine learning and natural language processing (NLP), and better generative AI to make conversations adaptive and insightful.

The future of research is about humans and machines working together to understand people better, faster, and more credibly.

It’s not one or the other.

It’s both: human ingenuity combined with machine power.

Frequently asked questions on AI moderation

Does AI moderation fully replace human researchers?

No. AI can run sessions and speed up analysis, but researchers are still needed for empathy, context, and strategic decisions. AI empowers human researchers; it does not replace them.

Are AI moderators able to keep conversation quality high?

Yes, AI moderators keep questions consistent and ask follow-ups, but researchers are still needed to understand tone, emotion, and complex answers.

How do we recruit participants for AI-moderated interviews?

Recruiting participants for AI-moderated interviews follows many of the same principles as traditional research—you still need to define your target audience, create screening questions, and ensure participants represent real users of your product.

With Maze, you can recruit participants through multiple flexible options:

- Use a shareable link or In-Product Prompts to invite your own users

- Access the Maze Panel, a marketplace of vetted research participants

- Invite previous research participants stored in your Maze Reach database

How is privacy handled in AI moderation, and what models are used?

Different AI moderators use different rules and models. Maze’s AI moderator runs on trusted third-party providers under strict privacy and retention policies. It uses OpenAI and Anthropic (via Amazon Bedrock) for language and analysis tasks, Rev AI for speech-to-text transcription, and Freeplay to test and monitor AI features.

Maze does not train models on your study data—only text and audio are processed, and data is deleted within 30 days (OpenAI, Rev AI) or 90 days (Freeplay, extendable only for quality review). All AI-powered features are clearly labeled, optional, and can be disabled at the organization level. For more details, check out Maze’s AI privacy and model use.

Can we run multilingual interviews without separate guides?

Yes! Maze’s AI Moderator can conduct multilingual interviews, adapting questions and follow-ups in participants’ languages and transcribing responses automatically. It supports 30+ transcription languages and uses GPT-4 for multilingual summaries and themes.

However, if you need fully localized phrasing, cultural nuance, or compliance-specific wording, it’s best to create separate language variants of your guide. Maze’s setup makes both approaches easy. For more details, see this help article on non-English language handling in Maze.