A Growing Mandate: The Path to Stakeholder Trust and Research Influence

Discover how UX research builds stakeholder trust and influence. Insights from 130+ professionals reveal what drives research impact in today’s orgs.

Research at the right time, place, and language

Survey after survey shows overwhelming demand for UX research across organizations. In ours, 93% of stakeholders reported using UX research findings in their decision-making and rated its value 5.7 out of 7. But digging deeper, there's often a gap between expected value and delivered impact.

In addition, the role of research is expanding. Once seen as a specialist function, research is now expected to guide strategy, not just validate design. That bigger mandate carries an uncomfortable implication: the old ways of working (slow cycles, siloed teams, and narrowly defined roles) are isolating the practice.

When we examined the difference between effective and ineffective research practices, our findings revealed a growing divide between what stakeholders value and where researchers are investing their time and energy.

The problem isn't that research isn't good enough; it's that it doesn't arrive at the right time, in the right place, or in the right language. And the unlock isn't just about resourcing. It's about empowering researchers to play bigger organizational roles that transform research from a specialist function into a business capability that scales across entire organizations.

Amanda Gelb

UX & Product Research Strategist, Founder @ Aha Studio

The right time: Insights at the speed of decisions

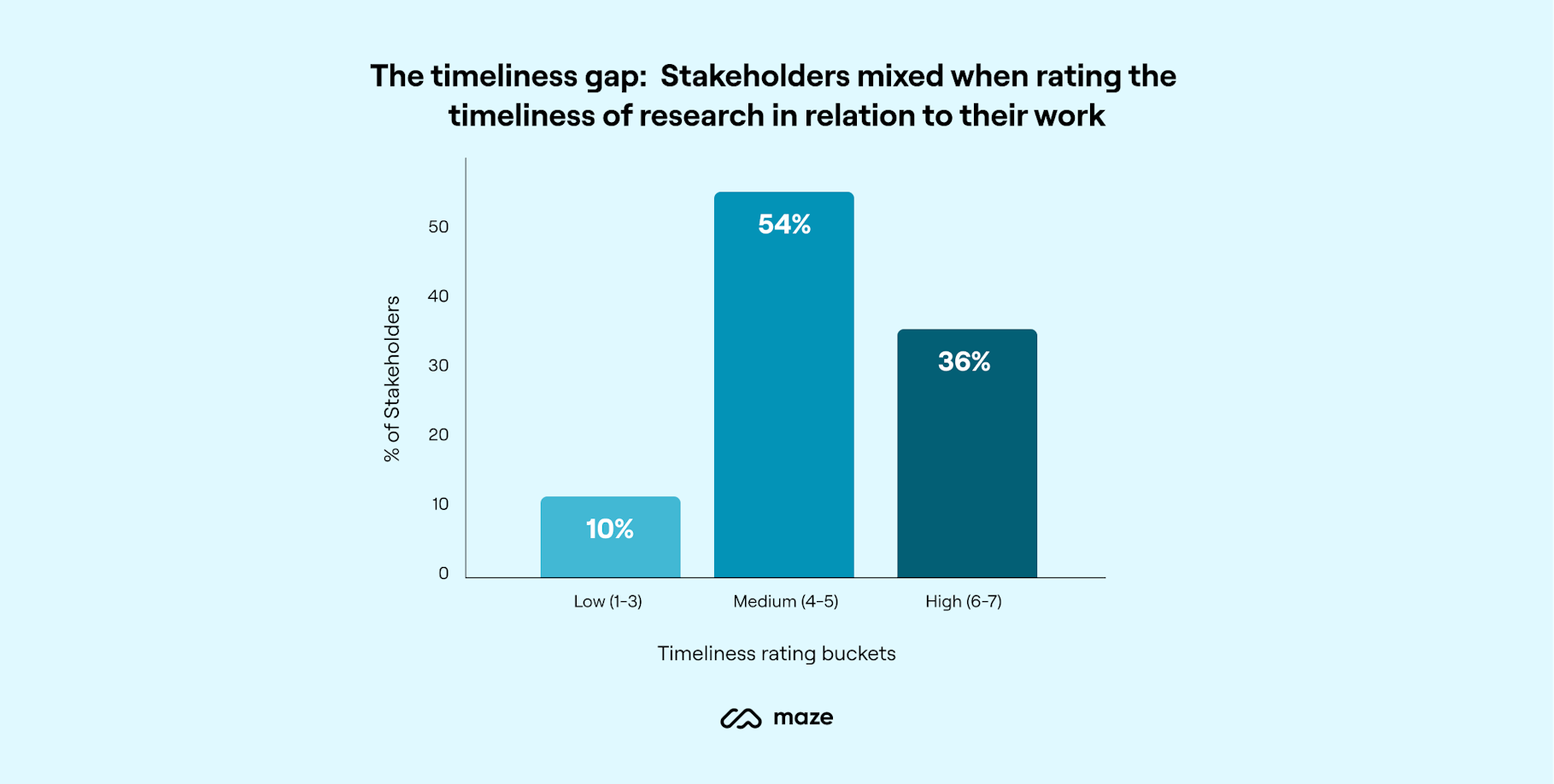

Researchers and stakeholders agree on the table stakes: research needs to be fast, timely, and rigorous (we'll come back to the rigor challenge later). Timeliness and speed were the most frequently cited challenges: researchers said that "tight deadlines make it hard to run proper studies," while stakeholders noted that "decisions are made before research finishes."

When it came to solving these challenges, researchers and stakeholders had different perspectives. Researchers pointed to the need for more budget for staffing and tools, whereas stakeholders favored scaling through "integration" and involvement. The difference revealed an unlock: scale doesn't come from adding more people; it comes from expanding roles.

Experts in the loop

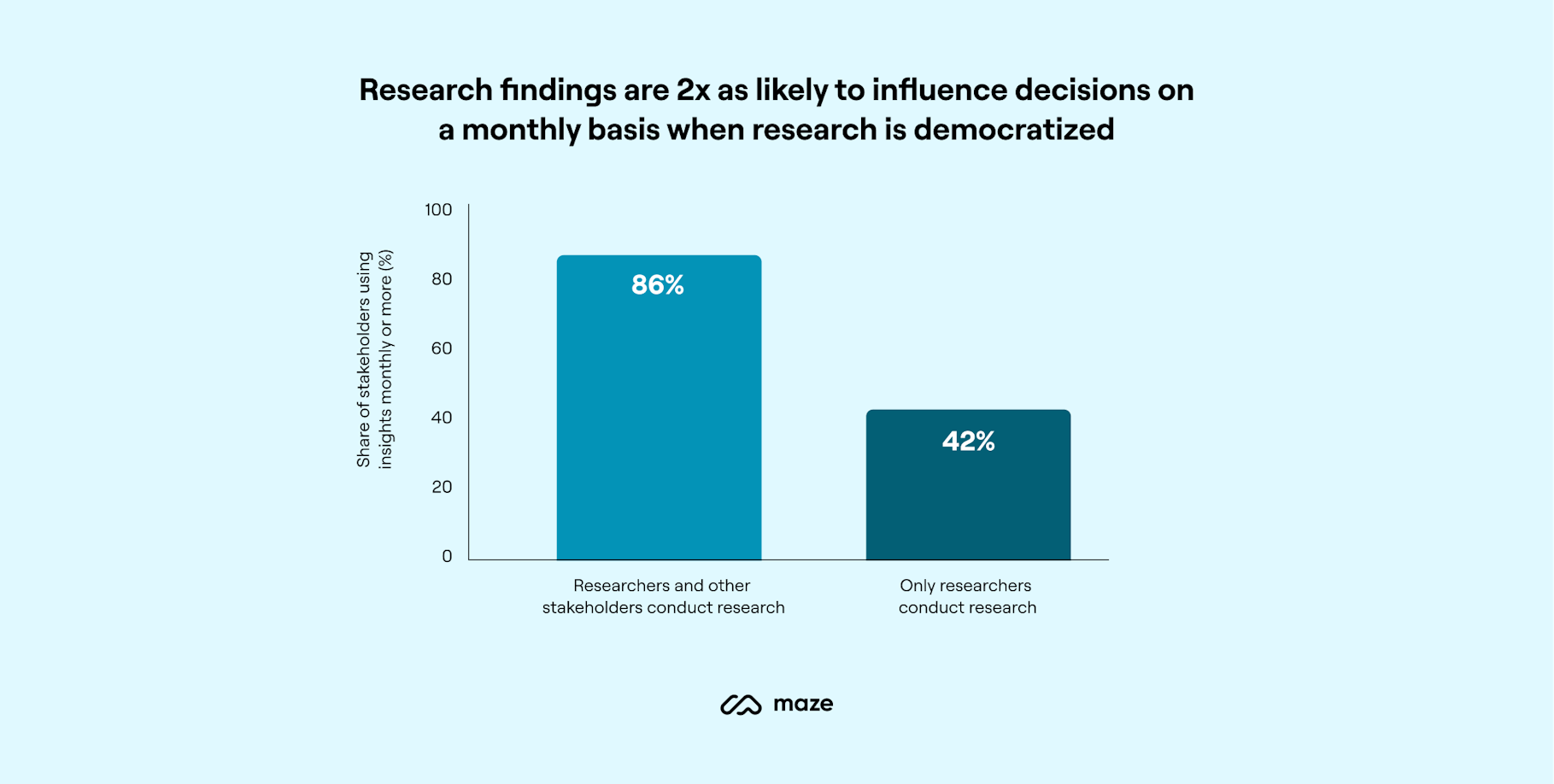

Interestingly, our sample skewed heavily toward organizations where stakeholders were involved in conducting research, with only 22% of stakeholders reporting that research lives exclusively with researchers. Even within this smaller base of non-democratized practices, the differences are notable. When stakeholders participated in conducting research, they were about twice as likely to use findings at least monthly (86% vs 42%). Integration scores also improved by nearly a full point (+0.8 on a 7-point scale).

The qualitative evidence suggests that democratization can lead to better integration when researchers go beyond simply handing over tools. Instead, they build systems and guardrails that allow more people to contribute while maintaining rigor. High-impact teams highlighted practices such as:

- Bringing cross-functional stakeholders into hypothesis forming

- Making findings widely accessible

- Keeping researchers in the loop as guides who ensure methodological credibility

In this model, democratization scales research without diluting it, transforming it from a siloed function into a true organizational capability.

When asked about best practices for involving stakeholders in the research process, participants pointed to the researcher's role as an integrated "expert in the loop," helping align decisions, methods, and timelines to meet varying needs across the organization:

“AI sped up both the generation of insights from raw data and the implementation of those insights into recommendations.”

Stakeholder Interview

“We definitely aren’t overly protective of conducting research, but we make sure methodology is respected. That’s how findings maintain influence.”

UX Researcher

“The incorporation of AI into research has been a welcome addition and has sped up the process to get insights, as well as saved a lot of time in acting on those insights.”

Stakeholder Interview

“Opening up access to research means sales and product managers bring new questions we wouldn’t have asked.”

Executive

“Research is most impactful when it’s widely distributed, not guarded.”

Stakeholder Interview

“The strongest researchers don’t just run the study—they guide us to the right method and frame results in a way that convinces leadership.”

Design Lead

The right place: Insights at all altitudes

Integration as cultural vs procedural

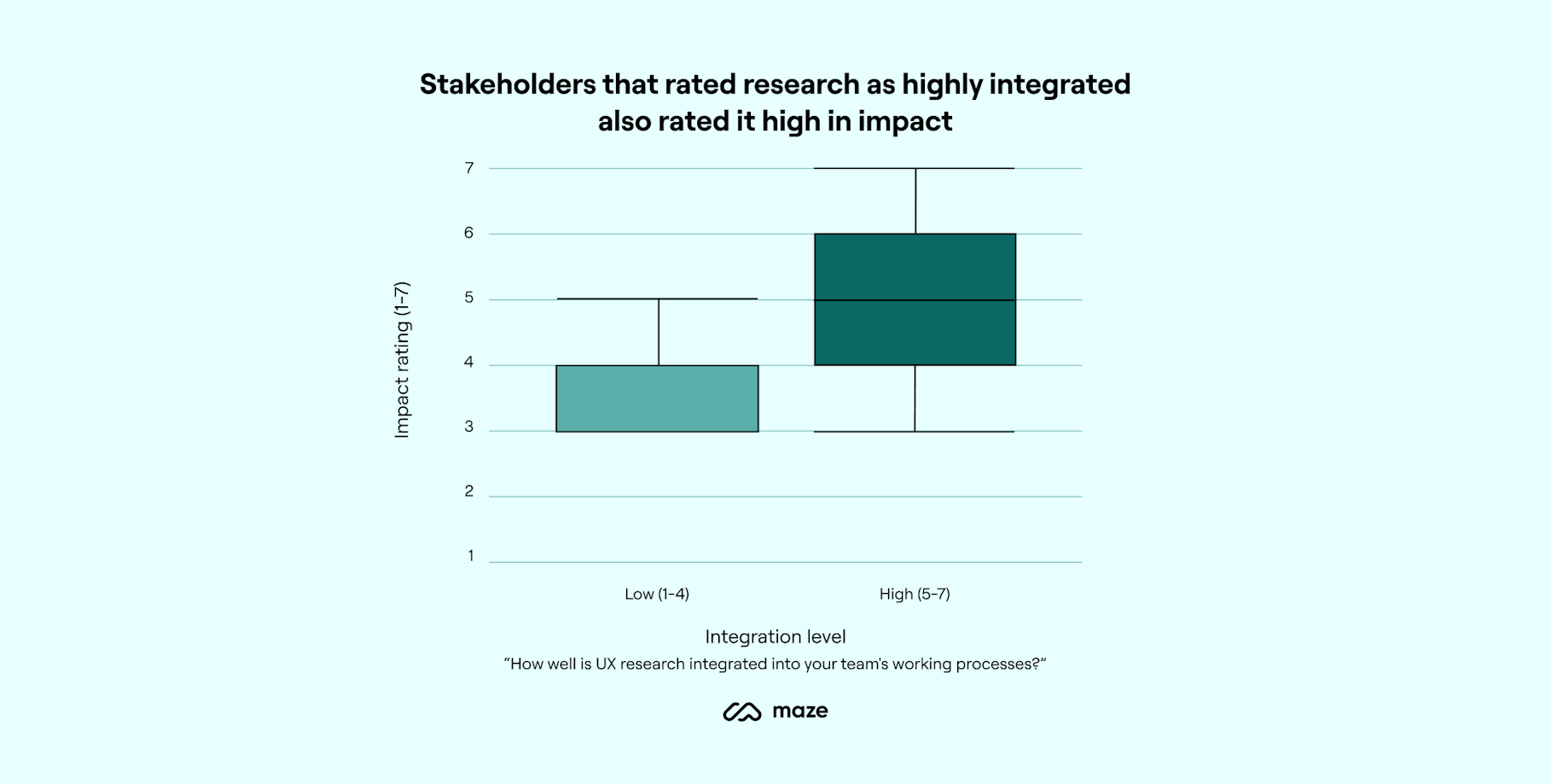

Integrating research into decision workflows emerged as a top priority for stakeholders. Those who rated research as "Highly Integrated" scored its impact 6.0/7, compared to 4.6/7 in poorly integrated environments, a 30% lift. So, what does integration mean?

Stakeholders came to interviews with a clear call to action: bring research in earlier. They want it embedded not just in design, but at the very start of decision-making and product development. While 79% of organizations include research during design, only 48% do so in the definition phase—exactly when the most strategic decisions are made.

Research as a practice vs a system

The language researchers and stakeholders use to describe integration is telling.

Researchers often focus on procedural and operational aspects. They see integration as a repeatable practice—structured, ongoing, and supported by guardrails that preserve rigor, much like design or QA.

By contrast, stakeholders are more outcome-oriented and decision-driven. For them, integration means having research present in the moment of decision-making. They want insights that are timely, strategically relevant, and capable of shaping choices—while recognizing research is just one input among many.

Notably, researchers rated their own integration efforts higher than stakeholders did, suggesting they may overestimate their effectiveness. Moving toward an expanded mandate, where research becomes part of the organizational DNA rather than a side project, requires a more flexible, systematic approach. Methodologies need to scale up or down to match the criticality and pace of decisions. As one VP of Product put it: "Shift from perfection to progress. Sometimes a 70% answer quickly is more valuable than a 100% answer too late."

This desire for researchers to broaden their scope while becoming more integrated presents a chicken-and-egg problem. Few researchers would object to participating earlier in decisions and more broadly across the product ecosystem. But access can be an issue. Is trust the prerequisite for integrating upstream, or does integration itself create the trust that secures researchers a seat at the table?

“We lose opportunities because the research arrives after decisions are already made.”

Executive

“Be involved earlier in projects, near the grassroots stages, so we’re not filling them in later.”

UX Researcher

“Don’t just validate, shape the initial scope.”

Executive

“Early research shapes the what and why; late research just validates the how.”

Designer

The right language: Insights that drive change

Researchers as change agents

There's good news for researchers who want their work to have strategic impact. Stakeholders in high-impact organizations consistently value research that is strategically relevant; by contrast, not a single stakeholder in low-impact organizations rated strategic relevance as a must-have. We hypothesized that trustworthiness is the gateway to strategic involvement, and our findings support it: 89% of stakeholders rated trust as critical. However, they defined trust in different terms than their research partners did.

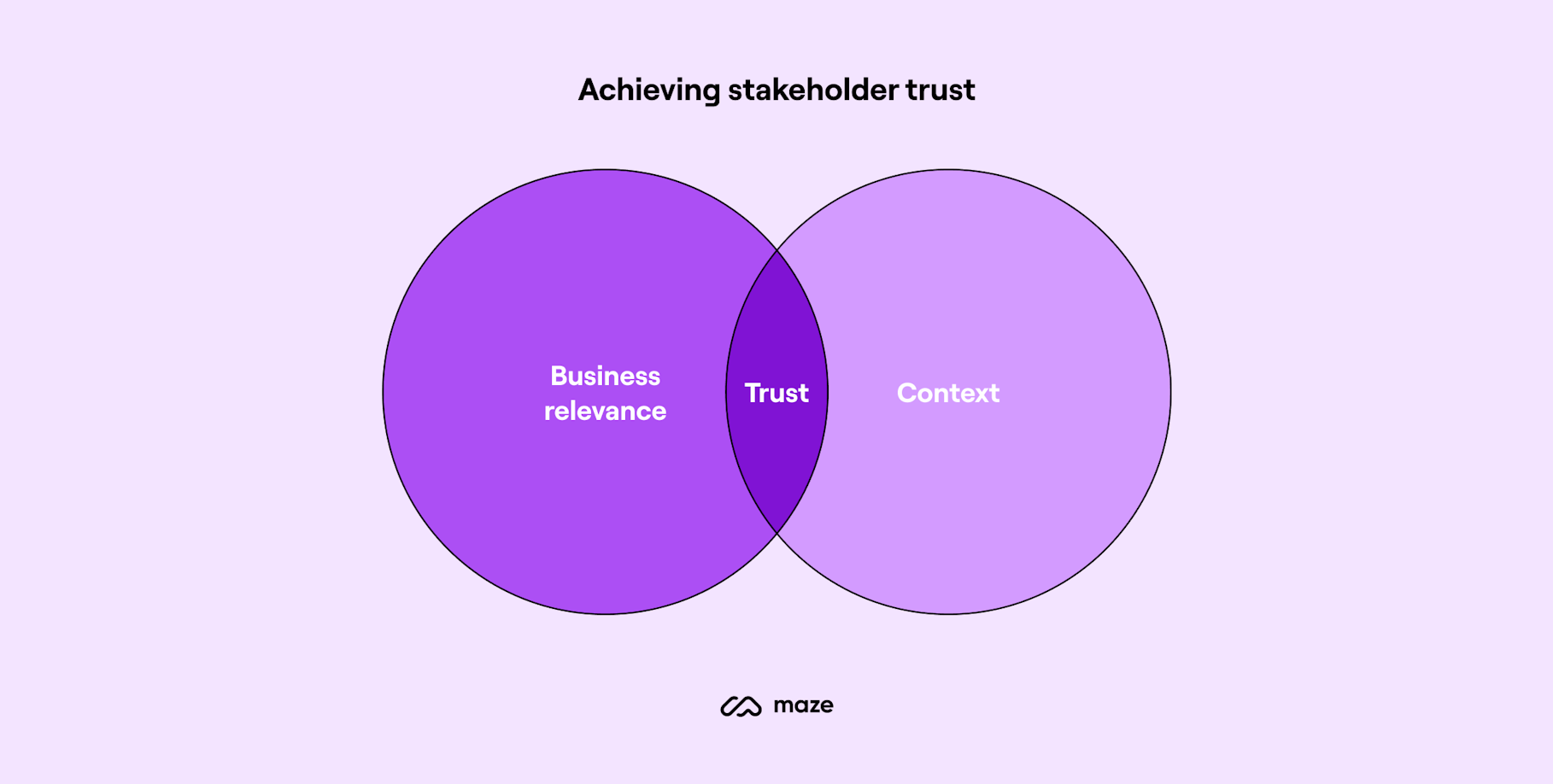

Stakeholders: Trust = context + business relevance

They're not just evaluating methodology; they emphasize that insights need to be useful in context, delivered on time, and tied to business outcomes.

Researchers: Trust = rigor + accuracy

Researchers emphasized the importance of sample size, mixed methods, validity, and accurate reporting. One UX researcher explained, "Rigor and sample size are how I know the study is credible."

This divide in definition matters.

Consistent with other studies we've run, stakeholders value qualitative insight backed by quantitative support. As noted above, methodological rigor is table stakes for critical decisions. Stories make insights relatable and actionable, while numbers build credibility.

“Back your recommendations with quantitative data, not just quotes.”

Product Manager

“Trust means you understand our constraints. Don’t give me blue-sky solutions.”

Executive

“We look for both the qualitative and quantitative evidence to socialize with different groups in the company.”

Executive

However, stakeholder verbatims and in-depth interviews added nuance: trustworthiness isn't earned only through methodological accuracy but also through insights that are relevant within stakeholders' specific business context. As one stakeholder explained: "To read and understand the product and the organizations more before trying to work or propose any kind of solutions that would make them more trustworthy and credible."

Context, storytelling, and a tie to outcomes are the keys to delivering research effectively.

Expanding the frame

Stakeholders value researchers who demonstrate both methodological expertise and a deep understanding of the specific organizational context. This balanced approach builds credibility by showing researchers can apply best practices while respecting business realities. One participant noted that effective UX researchers need to "read and understand the product and the organization more before trying to work or propose any kind of solutions," indicating that context knowledge is a prerequisite for trustworthiness.

Stakeholders described working within constraints: technical, regulatory, time, cost, and business priorities. Research was often presented without acknowledging these constraints and therefore missed the mark. One participant appreciated it when UX researchers "discuss those things with the technical team before presenting the business" to ensure proposed solutions were technically feasible. If researchers are experts at seeing through the eyes of users, the challenge is to also see through the eyes of their internal partners.

“If I could offer one key piece of advice to UX researchers, it would be to always contextualize findings within our specific business constraints and technical realities.”

Product Leader

Simple, balanced, storytelling

Researchers and stakeholders agree that "digestible" insights are important. Stakeholder feedback suggests researchers could do more to deliver findings as a straightforward, compelling narrative with clear business implications. As one participant described, this involves "a storyline that starts with a few intriguing findings and builds to a crescendo" highlighting key opportunities, threats, and next steps.

Stakeholders expressed a desire for research that balances multiple perspectives rather than focusing solely on problems. Such research acknowledges product strengths that "resonate with the customer," identifies areas "falling short" of expectations, and articulates key risks or "watch outs" to consider for future development.

Moreover, stories need to be told at different levels. While executive presentations might focus on high-level insights and business implications, technical teams might receive more detailed implementation considerations.

“Ensure reports have an easily readable and understandable section—at the beginning that itemizes the results and benefits of implementing their findings. Not all decision makers have a background in data/research analysis; therefore, simple reports are better.”

Executive Director

“Take the jargon out of the results you present.”

SVP Strategy

“Good marketing of the findings is just as important as doing the research.”

Ops Manager

Connected to outcomes

Research that translates findings into business metrics gains far more traction. Stakeholders repeatedly encouraged researchers to proactively connect their findings to financial outcomes rather than assuming stakeholders will make these connections themselves. As one participant advised, researchers need to "answer the so what question" by articulating "why a particular finding is relevant" to business decisions.

“Always make a compelling slide deck on why your research is useful after the research project.”

VP of Marketing

“Answer the so what. Tell me why it matters to growth and profitability.”

Executive

“We saved hundreds of thousands by replacing a complex feature with a simple field.”

UX Researcher

“People that are more likely to try the product, pay more, and spend more —that’s what justifies investment.”

Product Marketing Manager

"The most effective researchers I've worked with understand our development cycles, can speak to both user needs and business impact, and provide implementation guidance that acknowledges technical debt and resource limitations while still advocating for the user experience.”

Product Marketing Manager

The new research operating model

Our research reveals a telling irony. UX research has earned deep credibility in product organizations, yet many researchers risk prioritizing methodological purity over a more critical goal: improving the experience of research's own users, its stakeholders.

The researchers who will drive the profession forward are those who embrace a broader mandate (perhaps even moving beyond the “UX” label). Their focus will shift from running studies in isolation to building systems that position research as a true business capability—delivering insights at the right time, in the right place, and in the right language.

The playbook

Elevate strategically

Deeply understand the business context and connect research findings to ROI, business risks, and competitive advantage.

Embed early and often

Move research upstream and build versatile models for delivering timely insights across the organization.

Scale with credibility

Democratize access with versatile tools and methods by involving stakeholders, embracing mixed methods, and investing in AI acceleration.

“Our organization prides itself on experience as a competitive advantage. The user experience is of critical importance as the value and return on that product.” — Executive

About Maze

Maze is the user research platform that makes products work for people. Maze empowers any company to build the right products faster by making user insights available at the speed of product development.

Methodology

This study was designed to understand how UX research is valued and used inside organizations, with a focus on the differences between researchers and stakeholders.

Sample

- 82 UX Researchers (research leads, research managers, senior UXRs) from a mix of startups, growth-stage companies, and large enterprises

- 56 Stakeholders (product managers, designers, executives, marketing leaders) who work with research regularly

- Combined sample: 138 respondents across both groups

Data Collection

- Online surveys administered in 2025

- Participants recruited via professional networks and outreach targeting research practitioners and their cross-functional partners

- Surveys included closed-ended questions (1–7 Likert scales on impact, trust, timeliness, integration, organizational support, etc.); card sorts to identify the qualities respondents believe are critical to a successful UX research practice; and open-ended questions soliciting advice, observations, and verbatim examples

- In addition, 9 in-depth interviews were conducted with product, design, and executive stakeholders to add qualitative depth

Analysis

- Quantitative analysis included descriptive statistics and mean comparisons across groups

“Democratization” was coded using responses to:

- “Who conducts research in your organization?”

- Coded as democratized when stakeholders as well as UX researchers conducted research; researcher-only otherwise

Frequency of use was measured by:

- “How often are research findings used in your decision-making?”

- Monthly+ usage was treated as the threshold for consistent adoption

- Qualitative analysis applied thematic coding to open-ended survey responses and interview transcripts, identifying patterns around communication, integration, trust, and organizational culture
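The coding rules above can be expressed as a short script. The Python below is a minimal sketch under stated assumptions, not the study's actual analysis code. The response fields (`conducts_research`, `usage_frequency`), the frequency labels, and the four toy responses are all hypothetical and do not reproduce survey data. The script codes each stakeholder as democratized or researcher-only and then compares the share of monthly+ users in each group.

```python
from dataclasses import dataclass

# Hypothetical answer labels for "How often are research findings used in your
# decision-making?", ordered from least to most frequent.
FREQUENCY_ORDER = ["never", "rarely", "quarterly", "monthly", "weekly", "daily"]
# Monthly+ threshold: "monthly" and every more frequent label.
MONTHLY_PLUS = set(FREQUENCY_ORDER[FREQUENCY_ORDER.index("monthly"):])


@dataclass
class StakeholderResponse:
    conducts_research: set[str]  # hypothetical answer to "Who conducts research...?"
    usage_frequency: str         # one of FREQUENCY_ORDER


def is_democratized(r: StakeholderResponse) -> bool:
    # Democratized when stakeholders as well as UX researchers conduct research;
    # every other answer is coded researcher-only.
    return {"stakeholders", "ux_researchers"} <= r.conducts_research


def monthly_plus_share(responses: list[StakeholderResponse]) -> float:
    # Fraction of respondents who use findings at least monthly.
    if not responses:
        return 0.0
    hits = sum(r.usage_frequency in MONTHLY_PLUS for r in responses)
    return hits / len(responses)


def compare_groups(responses: list[StakeholderResponse]) -> tuple[float, float]:
    # Returns (democratized share, researcher-only share).
    democratized = [r for r in responses if is_democratized(r)]
    researcher_only = [r for r in responses if not is_democratized(r)]
    return monthly_plus_share(democratized), monthly_plus_share(researcher_only)


# Toy responses for illustration only (not study data).
toy = [
    StakeholderResponse({"ux_researchers", "stakeholders"}, "weekly"),
    StakeholderResponse({"ux_researchers", "stakeholders"}, "monthly"),
    StakeholderResponse({"ux_researchers"}, "quarterly"),
    StakeholderResponse({"ux_researchers"}, "weekly"),
]
print(compare_groups(toy))  # (1.0, 0.5)
```

The frequency labels are kept in one ordered list, so the monthly+ threshold is defined in a single place. Testing a different cutoff (for example, weekly+) changes only one line.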

Limitations

- Non-democratized organizations were a small subset (≈12 stakeholders), so those comparisons are directional rather than statistically robust

- The stakeholder sample was skewed toward organizations already investing in UX research, which may bias perceived value upward

- Expanded social recruitment datasets (n=37) were used only to explore themes and were excluded from the primary statistical analysis because of variable data quality

- Findings should be interpreted as indicative patterns, triangulated across survey and interview data, rather than definitive causal claims

This playbook was made possible in partnership with Jane Davis (formerly Maze).